The Instagram application for Android has become one of the most popular social media platforms in the world. With over 300 million monthly users across all platforms and over 100 million downloads on the Google Play Store, you’d expect Facebook’s snapshot-sharing application to boast quality in both design and functionality. Unfortunately, for a big chunk of its userbase, namely Android Instagrammers, Instagram falls short at the one thing it is supposed to do: sharing pretty pictures.

While iOS users can share their creations and moments with high fidelity, Android users have been reporting extreme quality loss in their pictures for years. One of the oldest threads complaining about this headache dates back to September 2012 here on XDA, and it is still running today, offering readers unorthodox ways to get around Instagram’s ridiculous mangling of photos. You’d think that after over two years of complaints, technological improvements in both software and hardware, and economic and market growth, Instagram would have addressed these issues. Is Instagram to blame? Or does the underlying cause of the problem run deeper than it seems?

A Direct Look

Here is a picture I took myself. The original shot weighs 4.34MB and was taken at 9.6MP. To rule out the “Instacrop” downsampling, which would understandably destroy the detail of such a high-resolution file when downscaling it to Instagram’s native 640x640 pixel output, I cropped it to a 1:1 JPG matching the aspect ratio of Instagram uploads, so I could see the direct effects of the post-processing algorithm and its compression on this file.
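For readers who want to reproduce this kind of test, a 1:1 crop can be computed from the image dimensions alone. The sketch below assumes the square is centered (the article does not say where the crop was taken) and uses a hypothetical 3792x2528 frame as a stand-in for a 9.6MP shot. The returned box follows the (left, upper, right, lower) order that Pillow’s `Image.crop` accepts.

```python
def center_square_crop(width: int, height: int) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) for the largest centered 1:1 crop."""
    side = min(width, height)          # the square can be no larger than the short edge
    left = (width - side) // 2         # trim equally from both sides of the long edge
    top = (height - side) // 2
    return left, top, left + side, top + side

# Hypothetical 9.6MP landscape frame (3792 x 2528 is an assumption, not from the article).
print(center_square_crop(3792, 2528))  # (632, 0, 3160, 2528)
```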

I simply took the square JPG crop and posted it to my Instagram without adding any filters or effects or tweaking any values. You’d expect the image to come out looking much like the original, but the result was underwhelming. The compression artifacts around borders and color gradients are stunningly noticeable, even to the untrained eye. The original 1:1 crop was 1.6MB, while the resized and compressed image is 125KB, so the compression shrank the file by a factor of almost 13, which isn’t necessarily bad in some contexts.

Interestingly, Instagram offers a “High Quality Image Processing” option that is off by default, but turning it on does not seem to truly improve the results, and the compressed file comes in at 129KB. Here is the same crop processed with that option enabled: the compression is still rather intense, and the image still looks coarse and pixelated.
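The file sizes above can be put in perspective with a little arithmetic. This minimal sketch assumes decimal units (1KB = 1000 bytes) and that the uploaded image is 640x640, Instagram’s native output size; the helper names are illustrative only.

```python
KB = 1000  # decimal units assumed; the article does not state its convention

def compression_ratio(original_bytes: float, compressed_bytes: float) -> float:
    """How many times smaller the compressed file is."""
    return original_bytes / compressed_bytes

def bits_per_pixel(file_bytes: float, width: int, height: int) -> float:
    """Average number of bits the file spends on each pixel."""
    return file_bytes * 8 / (width * height)

original = 1600 * KB      # the 1:1 crop
standard = 125 * KB       # default upload
high_quality = 129 * KB   # with "High Quality Image Processing" enabled

print(round(compression_ratio(original, standard), 1))      # 12.8
print(round(compression_ratio(original, high_quality), 1))  # 12.4
print(round(bits_per_pixel(standard, 640, 640), 2))         # 2.44
```

For comparison, an uncompressed 24-bit RGB image spends 24 bits on every pixel, so roughly 2.4 bits per pixel is about a tenth of the raw data.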

Compression

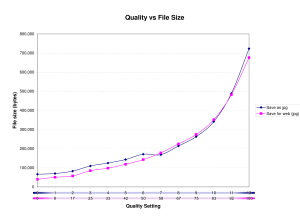

Computer algorithms offer various ways of reducing the size of an image, using different techniques that optimize the data needed to later interpret and display the picture correctly. Image file formats are tightly tied to the compression techniques they do or do not support, which is why some types of pictures typically look better than others. PNG (Portable Network Graphics) files are usually used to share media without losing fidelity or picture quality, at the expense of a larger file size than images that undergo lossy compression, such as JPEG. GIF is a much older image format that is also compressed losslessly.

Many programmers learn techniques for reducing or optimizing file size during their studies, regardless of the field they go on to work in. Names such as DEFLATE (used for PNG) and the Lempel-Ziv-Welch algorithm (used for GIF) come up in many Computer Science classrooms and ring a bell for many programmers. With increasingly efficient compression techniques being developed and documented, you’d expect the billion-dollar platform to use reasonably efficient algorithms that output a very nice picture without being too taxing on its servers or the user’s hardware.
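Lempel-Ziv-Welch is compact enough to sketch in a few dozen lines. The version below is a minimal, illustrative implementation that emits plain integer codes; GIF’s actual variant adds variable code widths plus clear and end-of-information codes, none of which are modeled here.

```python
def lzw_compress(data: bytes) -> list[int]:
    """Encode bytes as LZW codes, growing the dictionary as patterns repeat."""
    table = {bytes([i]): i for i in range(256)}  # start with all single bytes
    next_code = 256
    current = b""
    codes = []
    for byte in data:
        candidate = current + bytes([byte])
        if candidate in table:
            current = candidate                  # keep extending a known string
        else:
            codes.append(table[current])         # emit the longest known prefix
            table[candidate] = next_code         # learn the new, longer string
            next_code += 1
            current = bytes([byte])
    if current:
        codes.append(table[current])
    return codes

def lzw_decompress(codes: list[int]) -> bytes:
    """Rebuild the same dictionary on the fly and expand each code."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    next_code = 256
    previous = table[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == next_code:
            # The code refers to the entry being defined right now.
            entry = previous + previous[:1]
        else:
            raise ValueError(f"invalid LZW code {code}")
        out.append(entry)
        table[next_code] = previous + entry[:1]
        next_code += 1
        previous = entry
    return b"".join(out)

data = b"TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_compress(data)
assert lzw_decompress(codes) == data  # lossless round trip
print(f"{len(data)} bytes -> {len(codes)} codes")
```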

But this simply isn’t the case. The pictures that millions of Instagrammers, myself included, take and upload every day directly contradict the narrative of engineering prowess at these superpowers of the tech world, which are supposed to reinvest a big fraction of their revenue in their software to provide the best user experience. But one question remains unanswered: Why Android and not iOS?

VSCO and Android Memory

While popular internet forums like Reddit tried to figure out the cause of their daily outrage, the only explanations on offer were that Android hardware is intrinsically inferior in computing power, or that, given the vast array of lower-end Android devices, these measures had to be taken to ensure a consistent user experience across the entire platform, regardless of how much you paid for your phone. As the months went by, reports after every Instagram update described the same issue, until the problem became a running gag among forum users that followed every new version of the application.

Users also noticed something similar with the popular camera and photo-editing application VSCO Cam. Although it was touted as “the new standard of Android Photography”, some were quick to notice that it fell short of that claim. The quality loss and type of artifacts were similar to Instagram’s, so some were quick to connect the dots. Until recently, we only had speculation as to what could be causing the problem. Some blamed Android’s built-in bitmap downsampling algorithms directly. However, the most compelling explanation to surface was simply that Instagram, and possibly VSCO, had a poor implementation of a downsampling algorithm, specifically Nearest Neighbor resampling. But without official word from the developers, this speculation couldn’t be confirmed.
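The difference between Nearest Neighbor resampling and an averaging filter is easiest to see on a fine pattern. In this sketch (the function names are hypothetical, and the nearest-neighbor variant samples the top-left pixel of each block, one of several common conventions), halving a one-pixel black-and-white checkerboard with nearest neighbor throws away every white pixel, while a box filter averages each block to mid-gray.

```python
def nearest_neighbor_downscale(img, factor):
    """Keep one source pixel (top-left of each block) per output pixel."""
    h, w = len(img), len(img[0])
    return [[img[y * factor][x * factor] for x in range(w // factor)]
            for y in range(h // factor)]

def box_downscale(img, factor):
    """Average every factor x factor block of source pixels."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h // factor):
        row = []
        for x in range(w // factor):
            block = [img[y * factor + dy][x * factor + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

# 4x4 checkerboard of black (0) and white (255) single pixels.
checker = [[255 if (x + y) % 2 else 0 for x in range(4)] for y in range(4)]

print(nearest_neighbor_downscale(checker, 2))  # [[0, 0], [0, 0]]
print(box_downscale(checker, 2))               # [[127.5, 127.5], [127.5, 127.5]]
```

This kind of aliasing on fine detail and edges is the sort of damage users attributed to Nearest Neighbor, although no developer confirmed which algorithm either app actually uses.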

It was then that we learned through VSCO’s technical support that the reason for their loss of resolution and fidelity was not a poor software implementation, but rather a memory constraint in Android devices:

“Most Android devices are quite constrained in memory despite some having upwards of a few gigabytes of memory, but applications are not allowed to use all of that available memory and thus we have to make due with what is given to us from Android.”

“Large images may be downsized up to 50% when importing depending on the device you are on and available memory.”

They also say that their image processing techniques are very taxing on both memory and the SoC, and that this, coupled with Android’s memory limitations, is why we see a quality bottleneck that we don’t find on iOS.

According to Android’s developer training articles, Android sets a hard limit on the heap size of each app to maintain a functional multitasking environment. The exact limit varies with how much RAM the device has available, and an app that reaches its heap capacity and tries to allocate more memory risks an OutOfMemoryError.

So at first glance, VSCO’s story seems compelling, but it doesn’t explain a few things that skeptics can’t seem to shake off.

Limitation

At a superficial glance, we can ask this question: if a smartphone that typically has between 1GB and 2GB of RAM and the latest in portable processors can’t process an image at full resolution, how can DSLR cameras with 32MB of RAM do it?

We reached out to one of our Senior Recognized Developers for an expert opinion on this issue. OmniROM developer XpLoDWilD commented:

“The limitation here is rather the way the image is computated or processed. GPU is faster for that, and the fastest way to do it is by 'uploading' the image into the GPU as a texture and process it - the issue with it being that you are limited by the GPU maximum texture size, which is generally 4096x4096.”

For reference, 8MP pictures are 3264x2448 and 12MP pictures are 4000x3000, both small enough to fit within a 4096x4096 texture. Newer flagship and camera-phone sensors, however, go upwards of 13MP and produce pictures wider than the maximum GPU texture size, so the image inescapably has to be downscaled to fit, losing overall detail.
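The texture-size arithmetic can be checked directly. The sketch below assumes the 4096x4096 limit XpLoDWilD quotes (actual limits vary by GPU) and uses 4160x3120 as an example 13MP resolution; sensor resolutions at that megapixel count differ between phones.

```python
MAX_TEXTURE = 4096  # limit quoted by XpLoDWilD; real GPUs vary

def fits_in_texture(width: int, height: int, max_size: int = MAX_TEXTURE) -> bool:
    """True if both dimensions fit in a single max_size x max_size texture."""
    return width <= max_size and height <= max_size

def scale_to_fit(width: int, height: int, max_size: int = MAX_TEXTURE) -> float:
    """Largest uniform scale factor (never above 1.0) that makes both sides fit."""
    return min(1.0, max_size / width, max_size / height)

resolutions = {
    "8MP": (3264, 2448),
    "12MP": (4000, 3000),
    "13MP (example)": (4160, 3120),  # assumed example resolution
}

for name, (w, h) in resolutions.items():
    s = scale_to_fit(w, h)
    print(f"{name}: fits={fits_in_texture(w, h)}, "
          f"output={round(w * s)}x{round(h * s)}")
```

With these numbers, a 4160x3120 frame only has to shrink to 4096x3072 to fit, so the texture limit on its own accounts for a small loss of resolution.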

“The issue is not that apps are uploading a downscaled version, however, it is rather that apps are processing a downscaled version of the image, and uploading that processed file”, he added. “Most probably to reduce the processing time further, they also set the resolution even lower”.

XpLoDWilD suggests a balance between processing time and the GPU constraint: rather than showing the user a fully processed preview of the image they are working on, the editor could use a downscaled thumbnail that fits on the screen (something smaller than 2048x2048) as the visual aid. This thumbnail could be processed reliably fast while still giving the user a good idea of how the picture will look. Once the user confirms their value adjustments and filter selection, the full-resolution picture would be transformed in the background by splitting it into a grid of blocks the size of the thumbnail and processing each block separately. The final step would be compositing the result on the CPU by fitting each region back into one large full-resolution bitmap.
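The tiling step XpLoDWilD describes can be sketched in plain Python, with nested lists standing in for bitmaps and an ordinary function standing in for the GPU filter. This is an illustration of the idea under those assumptions, not code from any app, and `brighten` is a hypothetical adjustment. As written it only holds for per-pixel adjustments; neighborhood filters such as blur or sharpen would need overlapping tiles to avoid visible seams.

```python
from typing import Callable, Iterator

Image = list[list[int]]  # rows of pixel values, a stand-in for a bitmap

def split_into_tiles(img: Image, tile: int) -> Iterator[tuple[int, int, Image]]:
    """Yield (top, left, block) for each tile; edge tiles may be smaller."""
    for top in range(0, len(img), tile):
        for left in range(0, len(img[0]), tile):
            block = [row[left:left + tile] for row in img[top:top + tile]]
            yield top, left, block

def process_tiled(img: Image, tile: int, per_pixel: Callable[[int], int]) -> Image:
    """Filter each tile separately, then composite into a full-size result."""
    out = [[0] * len(img[0]) for _ in img]  # full-resolution output canvas
    for top, left, block in split_into_tiles(img, tile):
        # In the approach described above, this step would run on the GPU,
        # with each tile uploaded as a texture no larger than the limit.
        processed = [[per_pixel(p) for p in row] for row in block]
        for dy, row in enumerate(processed):
            out[top + dy][left:left + len(row)] = row
    return out

def brighten(p: int) -> int:
    """Hypothetical per-pixel adjustment: add 40, clamped to 255."""
    return min(255, p + 40)

# A 7x10 test image that does not divide evenly into 4x4 tiles.
img = [[(x * 31 + y * 17) % 256 for x in range(10)] for y in range(7)]
tiled = process_tiled(img, 4, brighten)
whole = [[brighten(p) for p in row] for row in img]
print(tiled == whole)  # the tiled result matches processing the whole image
```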

That is one way to process the image at its original resolution, and it is something Instagram doesn’t appear to do. The preview you see, right up to the moment you process the picture, doesn’t show the terrible quality and artifacts of the ready-to-upload picture. Since the preview doesn’t seem to undergo the brutal compression, the compression must take place when the final image is processed, which is what outputs the low-quality image.

The Android platform really has no trouble processing an image at full resolution, let alone uploading it. On the hardware side, the latest iPhones have a similar texture size limit of 2048 to 4096 pixels, yet iOS users don’t see the same quality loss. So it is probably not a hardware limitation, and it is not a platform limitation either, as other developers can, and have, worked around it.

But there was a cap on the heap size, though!

Yes, but not quite. There is a reasonable limit on the Java heap, because of the additional memory needed for high-density images. After some research, I found a discussion in a Google Group about the Android NDK, or Native Development Kit, which lets developers reuse code written in C/C++ by calling it from applications through the Java Native Interface. This can make an app somewhat faster, as native code runs directly on the processor instead of on a virtual machine.

In the conversation, Google engineer and Android Framework Developer Dianne Hackborn clears up some misconceptions about Android’s memory constraints. She notes that, “given that this is the NDK list, the limit is actually not imposed on you, because it is only on the Java heap. There is no limit on allocations in the native heap...”. As for RAM usage, she comments: “If there is enough RAM, the data will be kept in RAM. If not... well, you still run”.

She also says that not only is there no limit on the native heap, there is none on the GPU heap either. So, thanks to the NDK, it seems that Android as a whole doesn’t really “impose” any restrictions on how much memory, general processing, or GPU power you can use.

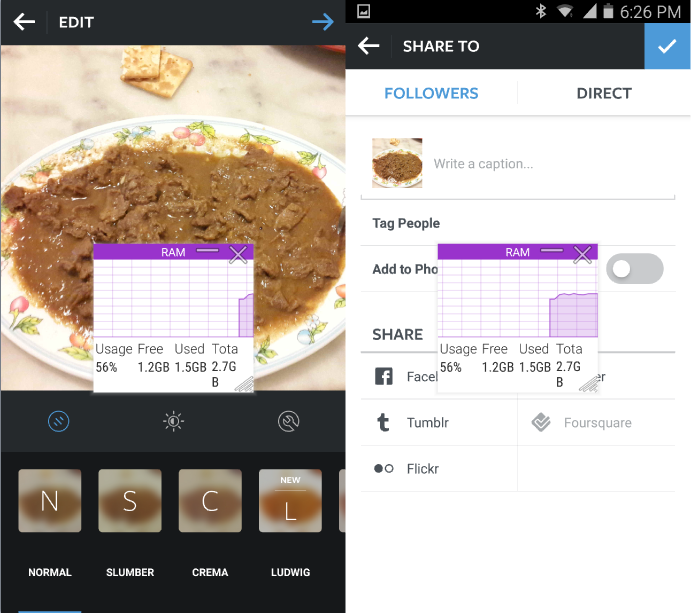

But even then, the Java heap should be large enough for one picture. A 13MP picture as an uncompressed bitmap (ARGB_8888) takes approximately 50MB. The default maximum heap size ranges up to 256MB or 512MB, depending on the Android device and the Android version it is running. Instagram takes about 62MB when idle, and judging from my System Monitor graph, the increase in RAM use while retrieving and processing a 13MP image seems negligible, and certainly nowhere near the limit supposedly “imposed by Android”. That limit can be worked around regardless, and it can also be avoided or mitigated by choosing certain algorithms over others.
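The bitmap arithmetic above is simple enough to write down. This sketch assumes a 4160x3120 frame as the 13MP example and a 256MiB heap, and it treats the 62MB idle figure as heap already in use, a conservative assumption since System Monitor reports the whole process. All three are illustrative inputs rather than measurements of any particular device.

```python
BYTES_PER_PIXEL_ARGB_8888 = 4  # 8 bits each for alpha, red, green and blue
MIB = 1024 * 1024

def bitmap_mib(width: int, height: int) -> float:
    """Size of an uncompressed ARGB_8888 bitmap in MiB."""
    return width * height * BYTES_PER_PIXEL_ARGB_8888 / MIB

def fits_in_heap(width: int, height: int, heap_mib: float,
                 used_mib: float, copies: int = 1) -> tuple[bool, float]:
    """Check whether `copies` full-size bitmaps fit in the remaining heap."""
    needed = copies * bitmap_mib(width, height)
    return used_mib + needed <= heap_mib, needed

# Illustrative inputs: 13MP example frame, 256 MiB heap, 62 MiB already in use.
for copies in (1, 2):  # 2 = separate input and output bitmaps
    ok, needed = fits_in_heap(4160, 3120, heap_mib=256, used_mib=62, copies=copies)
    print(f"{copies} bitmap(s): {needed:.1f} MiB needed, fits: {ok}")
```

Even with a second full-size bitmap for the processed output, the total stays around 161MiB, well under the assumed 256MiB budget.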


Conclusion

As previously mentioned, we may never know the full story of what goes on behind the scenes of these apps. But the justifications offered by their makers, or by their apologists, simply don’t seem all that plausible on close inspection. The issue seems to be caused by mediocre software implementation rather than by any limitation of Android’s hardware or software.

Applications that work around the compression already exist, and together with Android’s documentation on its inner workings, the potential of current Android hardware, and the opinion of experts, they all suggest that the injustice Android users face is either deliberate or, at the very least, knowingly solvable.

I think it is time for Android users to get not only the truth, but also the treatment they deserve. While Android devices may, on average, lag behind iPhones in hardware, there is no reason to lower the standards and ruin everyone’s user experience over it. And as each developer hands the platform second-hand leftovers, users increasingly direct their frustration at the developers rather than the system, as they should.

Credit to PixelPulse for featured image