The GPU is perhaps the most exciting part of a gaming PC: it provides the power necessary to increase resolution and visual detail settings, and it can easily be upgraded generation after generation. It's also the traditional arena where Nvidia and AMD duke it out for commercial and technological supremacy (though the fight has been shifting to the datacenter in recent years). These two companies have often fought tooth and nail every generation, sometimes producing graphics cards that aren't merely great, but truly decisive in changing the direction of the industry.

It's extremely difficult to choose even a dozen historical graphics cards; over the industry's 30-year history, you could suggest over 20 game-changing GPUs, and it would simply take too long to analyze each one. I've identified the seven most important Nvidia and AMD graphics cards from the past 20 years and how they each marked a significant turning point.

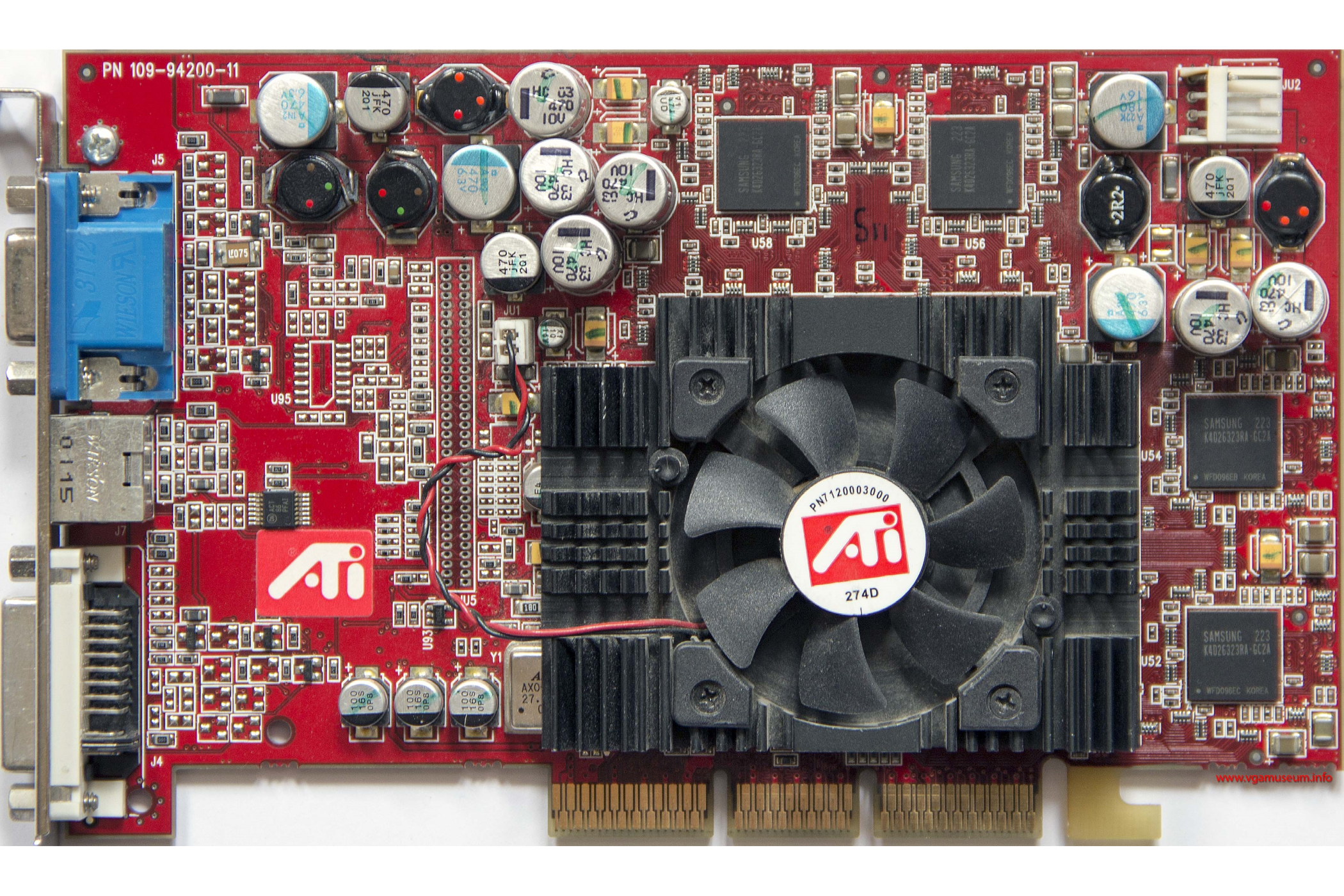

ATI Radeon 9700 Pro: Defining what it means to be legendary

When the graphics industry was first emerging, it wasn't a duopoly like it is today, and the principal players in the 90s were Nvidia, ATI, Matrox, and 3dfx. By the time the GeForce 256 was released in 1999 (which Nvidia questionably asserts was the first GPU), Matrox had exited the gaming market and 3dfx was in the process of selling most of its intellectual property to Nvidia. ATI was the last company that could stand up to Nvidia, and the odds didn't look good. The company's Radeon 7000 and 8000 GPUs fell short of Nvidia's own GeForce GPUs, and if ATI couldn't compete, it might repeat the fate of Matrox and 3dfx.

In order to win, ATI decided to do something radical and make a GPU far larger than normal. Most GPU chips had an area of 100mm², but when developing its R300 processor, ATI decided to make it over 200mm². Larger GPUs are much more expensive to produce, but the performance benefits were immense. The R300 GPU had over 110 million transistors, easily making Nvidia's top-end GeForce4 with its 63 million transistors look tiny by comparison.

R300 powered the Radeon 9000 series, which debuted with the Radeon 9700 Pro in 2002. The 9700 Pro totally decimated Nvidia's GeForce4 Ti 4600 in pretty much every game, and in some cases beat Nvidia's flagship by a factor of 2 or more. Despite the 9700 Pro being $100 more expensive than the Ti 4600, publications like AnandTech gave it glowing recommendations. The success of ATI's big GPU gave the company a much-needed reprieve from Nvidia, but not for long.

Nvidia GeForce 8800: The only card that mattered

The 9700 Pro really set the stage for the next two decades of graphics cards, and it became clear very quickly that new top-end GPUs needed to be big. Nvidia and ATI followed up R300 with 200mm² and even 300mm² chips, and although it was ATI that started this arms race, Nvidia eventually regained performance leadership with its GeForce 8 series in 2006, which debuted with the GeForce 8800 GTX and 8800 GTS.

The flagship 8800 GTX was anywhere from 50% to 100% faster than ATI's top-end Radeon X1950, resulting in a victory that AnandTech said was "akin to what we saw with the Radeon 9700 Pro." The upper midrange 8800 GTS, meanwhile, could tie the X1950 in some titles and beat it by 50% in others. Even at $600 and $450 respectively, the 8800 GTX and 8800 GTS received glowing praise.

But the real star of the GeForce 8800 family was the GeForce 8800 GT, which launched in late 2007. Despite the fact that it was just a slightly cut-down 8800 GTX, it sold for around $200, about a third of the price of the top-tier model. It made basically every other Nvidia and ATI GPU obsolete, even the newest Radeon HD 2900 XT. What Nvidia did with the 8800 GT was shocking, and it's unthinkable we'll see such a thing happen again given the current state of the market.

AMD Radeon HD 5970: The modern graphics rivalry is born

After the GeForce 8 series, Nvidia enjoyed a period of dominance over ATI, which was acquired by AMD in 2006. The Radeon HD 2000 and 3000 series were already planned out when the acquisition happened, which meant AMD could only start changing the direction of its new graphics unit from the HD 4000 series onwards. AMD observed the dynamic between Nvidia and ATI, and ultimately rejected the large GPU status quo that had persisted since the Radeon 9000 series. ATI and AMD's new plan was called the "small die strategy."

The basic philosophy that went into the HD 4000 and 5000 series was that big GPUs were too expensive to design and produce. Smaller GPUs could get most of the performance large GPUs had, especially if they used the latest manufacturing process or node. If AMD wanted a superfast GPU, it could rely on its CrossFire technology, which allowed for two cards to work together. The small die strategy debuted with the HD 4000 series, and although it didn't beat the GTX 200 series in performance, AMD's smaller GPUs were just about as fast while costing almost half the price.

The HD 5000 series in 2009 not only iterated on the small die strategy, but also took advantage of Nvidia's struggles with getting its disastrous GTX 400 series out the door. The HD 5870 was AMD's fastest GPU with a single graphics processor, and it beat Nvidia's flagship GTX 285, which was beleaguered due to its old age. The HD 5970, however, was a graphics card with two of AMD's top-end graphics dies, which resulted in a GPU that was literally too fast for most users. Nvidia's woefully inadequate GTX 400 ensured that the HD 5970 would remain the world's fastest GPU until 2012, a reign of roughly three years.

AMD's small die strategy, while momentarily successful, was ultimately abandoned after the HD 5000 series. After all, if Nvidia's GTX 400 GPUs had been good, then HD 5000's small size would have been a big problem. From this point on, AMD would still prioritize getting to the newest node but wouldn't shy away from making large GPUs. Nvidia, meanwhile, would often stay on older, mature nodes both to save money and to produce large GPUs in a cost-effective way. These tendencies have mostly held up to the present day.

AMD Radeon R9 290X: Radeon's swan song

After HD 5000 and GTX 400, the dynamic between AMD and Nvidia began to shift and become more balanced. AMD's HD 6000 series didn't really improve much on HD 5000, while Nvidia's GTX 500 series solved the performance and power efficiency issues of GTX 400. In 2012, the HD 7000 and GTX 600 series fought to a draw, though AMD did get the glory for launching the world's first 1GHz GPU. At that time, AMD and Nvidia were both using TSMC's 28nm node, and AMD would have liked to upgrade to the 20nm node in order to pull ahead.

That's what AMD would have done were it not for the fact that virtually every silicon manufacturer in the world was having issues progressing beyond 28nm. In 2013, AMD couldn't rely on a process advantage and both companies once again launched brand new GPUs on TSMC's 28nm node, which resulted in some of the largest flagship GPUs the industry had seen to date. Nvidia beat AMD to the next generation with its $1,000 GTX Titan and $650 GTX 780, which were incredibly fast GPUs that had a die size of 561mm² (large even by current standards).

The R9 290X launched a few months later and almost delivered a decisive blow to Nvidia's shiny new GPUs. Despite its relatively small die size of 438mm², it was able to beat the 780 and match the Titan for just $550, making the 290X "a price/performance monster" according to AnandTech. It would have been another HD 5970 or 9700 Pro were it not for a key weakness: power consumption. The 290X consumed way too much power, and the reference card was simply too hot and loud. Ultimately, this situation would prove to be intractable for AMD, and its victory would be short-lived.

Nvidia GeForce GTX 1080: Nvidia becomes the face of modern graphics

In the years after the 290X launched, the dynamic between Nvidia and AMD changed dramatically. Ever since the HD 5000 series, AMD had always managed to keep up with Nvidia and vie for the number one spot, but financial issues began to plague the company for a variety of reasons. When Nvidia launched its new GTX 900 series in 2014, new GPUs from AMD were nowhere to be found. AMD did finally launch new cards in 2015, but most of them were just refreshed 200 series cards while the top-end R9 Fury X failed to recreate the magical R9 290X moment.

Meanwhile, TSMC finally launched its new 16nm node in 2015, which meant the next generation of GPUs could at last get a much-needed upgrade. Under normal circumstances, this would have been to AMD's advantage, and it might have used the 16nm node as soon as it came out were it not for the fact that the company was on the brink of going bankrupt. Instead, it was Nvidia that made it to 16nm first in 2016 with its GTX 10 series, which was fundamentally identical to the GTX 900 series aside from using the 16nm node.

The flagship GTX 1080 made it clear how much the silicon industry was lagging behind without new nodes to upgrade to. Compared to the top-end 980 Ti from 2015, the 1080 was half the size, used a quarter less power, and was 30% faster on average. That's a crazy level of improvement for just a single generation, especially since the 900 series was also seen as a big improvement over its predecessor. Nvidia achieved such a monumental victory over AMD that gaming GPUs almost became synonymous with GeForce.

The 10 series is perhaps the greatest family of graphics cards ever released, and it's also unique for being the last great generation of GPUs before the market took a turn for the worse. The GTX 1080 Ti is six years old at the time of writing and yet it still provides a midrange to high-end gaming experience; for reference, in 2018 the six-year-old GTX 680 was hardly even midrange anymore. PC enthusiasts almost universally have nostalgia for the 10 series, which launched at a time when GPUs didn't cost four digits.

Nvidia GeForce RTX 3080 10GB: Nvidia throws us a bone

The awesomeness of the GTX 10 series was immediately followed by the infamously disappointing RTX 20 series in 2018. These GPUs offered little to no performance improvement over the 10 series until the Super refresh came out in 2019. Nvidia wanted to rely on its innovative ray tracing and AI technologies to obliterate AMD (which had none of that), but an acute shortage of games that actually used ray tracing and deep learning super sampling (or DLSS) made the 20 series just a bad deal in general.

In order to provide a better next-gen product and head off the imminent threat of AMD's own upcoming next-gen GPUs, Nvidia needed to focus on delivering clearly better performance and value. Crucially, Nvidia teamed up exclusively with Samsung and left TSMC, its traditional partner. By using Samsung's 8nm node, Nvidia was leaving some performance and efficiency on the table since TSMC's 7nm was better. However, since Samsung had less demand for processors, it's highly likely Nvidia got a great deal for making its upcoming GPUs.

The RTX 30 series launched in late 2020 with the RTX 3090 and 3080 leading the charge. The 3090 with its $1,500 price tag was basically pointless; the $700 3080 was the real star of the show. It was a straight-up replacement of the RTX 2080 with 50% more performance on average at 1440p, which to TechSpot was "a hell of a lot better than what we got last generation." The 30 series also benefited from increased usage of ray tracing and DLSS, features that were initially gimmicks but were finally becoming useful. There was only one question remaining: how would AMD respond?

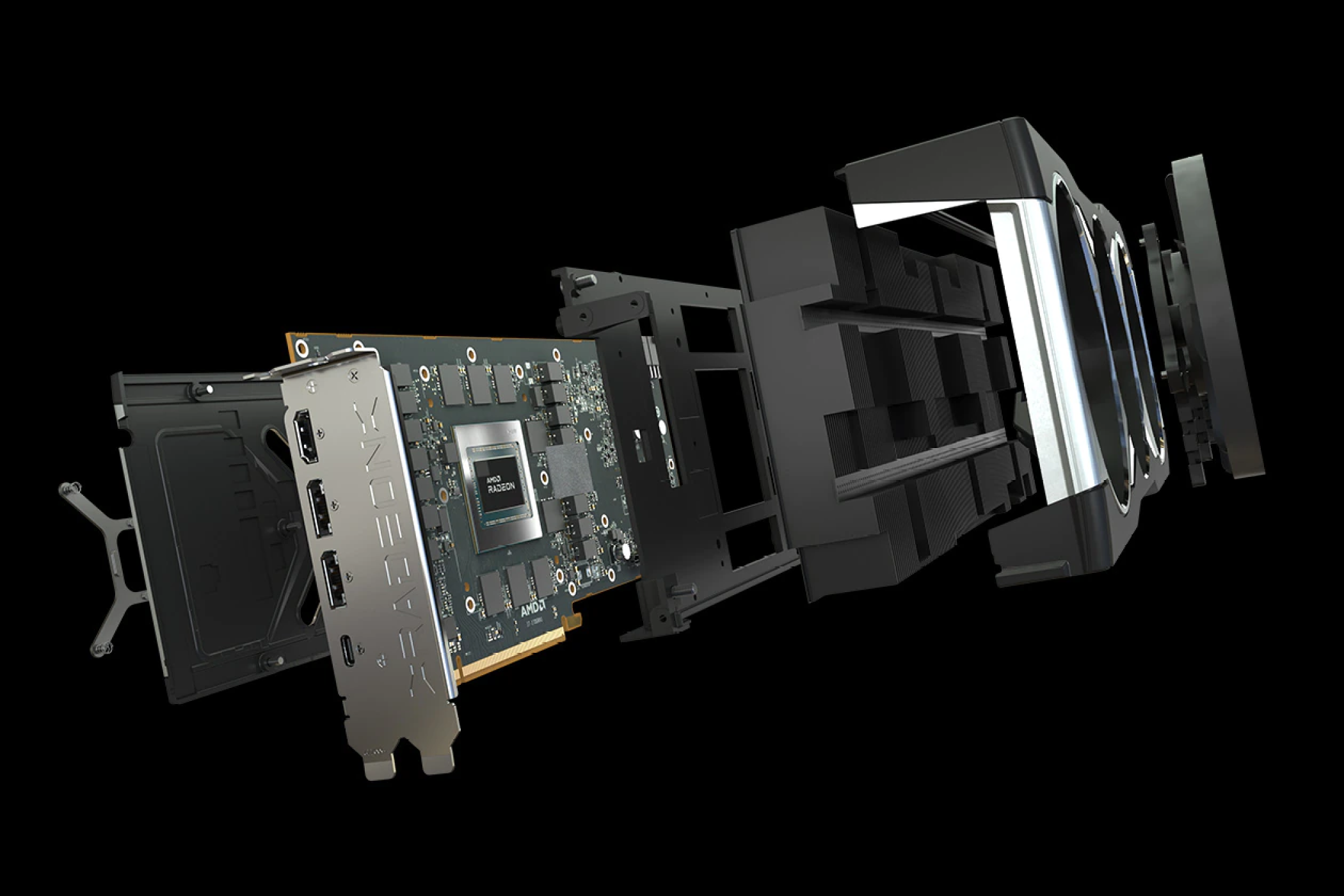

AMD Radeon RX 6800 XT: No longer the budget alternative

Ever since 2014, AMD had been the budget alternative, the brand you went to when you needed the best value and didn't care about features, power consumption, or bugs. Every time AMD tried to take the fight back to the high end, Nvidia was a step ahead. But in 2019, AMD finally regained the node advantage by using TSMC's 7nm process. Nvidia's choice of Samsung's 8nm ensured that AMD's competing chips would have a technological advantage, not to mention the fact that AMD's engineers were also becoming very good at building 7nm processors.

Two months after the RTX 3090 and 3080 launched, AMD launched the RX 6900 XT, 6800 XT, and 6800. All eyes were on the $650 6800 XT, which looked poised to go head-to-head with the 3080. On November 18, 2020, after the PC enthusiast community had spent those two months waiting, AMD finally returned to the high end. The 6800 XT could match the performance of the 3080, a significant achievement as AMD had always been a year behind Nvidia ever since the GTX 900 series. It wasn't a decisive win for AMD, but a slight victory was better than none.

It wasn't that simple, though. The infamous GPU shortage began in 2020 and persisted up until early to mid-2022. That meant the MSRPs didn't really matter, and the end result was that neither the 6800 XT nor the 3080 was available at an acceptable price. When the shortage began to wind down, however, AMD cards began to sell at MSRP while Nvidia GPUs didn't. In fact, today you can find 6800 XTs going for less than $600, while the 3080 rarely goes for less than $700.

Pricing is a significant factor in why the 6800 XT and the whole RX 6000 series are so easy to recommend, despite the lack of good ray tracing performance and cutting-edge features. In the here and now, it really looks like AMD won in the end, but again, it's not that simple.

The dystopian future of GPUs

Nvidia opened Pandora's box in 2018 when it launched the RTX 20 series. It introduced an era of GPUs with four-digit price tags, GPUs that didn't bring meaningful performance improvements, and GPUs that only really stand out when using new technologies of sometimes dubious value. For a brief moment in 2020, we all thought that maybe Nvidia and AMD were about to inject some much-needed energy into the market, but it's become clear that neither company wants to turn back the clock.

In 2023, Nvidia has a flagship that costs more than a midrange PC, a midrange card that got canceled a month before launch due to consumer fury, and mobile GPUs that have terrible names. Meanwhile, AMD is winning in the sub-$300 segment literally only because Nvidia hasn't competed there since 2019, and AMD's own flagship GPU is well behind Nvidia's. It's just a disaster no matter how you look at it, and as long as these two companies hold the keys to PC gaming, you'll have to pay them whatever they deem fit.