Google's mobile development platform, Firebase, is getting its biggest update this year at Google's annual developer conference, Google I/O. Today, Google announced new ways it is making machine learning more accessible to developers. It is also extending its performance monitoring tools to help web developers speed up their web apps.

Google announced ML Kit at last year's I/O to take the mystery out of machine learning for developers. They started out with a couple of APIs for the most common use cases, and this year they're expanding the SDK with the addition of 3 new APIs: an on-device API for translation, an API for object detection and tracking, and an API to easily create custom ML models. Native app developers can integrate the Performance Monitoring SDK into their app to collect performance data which they can then analyze in Firebase Performance Monitoring; soon, web developers will also be able to track the performance of their web apps in Firebase. I spoke with Francis Ma, Head of Product at Firebase, to learn more about these changes.

New ML Kit APIs

Google's ML SDK currently supports 7 APIs: text recognition, face detection, barcode scanning, image labeling, landmark recognition, smart reply, and language identification. The last 2 were only recently added in April, but now they'll be joined by the 3 aforementioned APIs. Here's a high-level summary of the 3 new ML APIs for developers:

- On-device API for translation: Using the same model that powers the Google Translate app's offline translation, this new API allows developers to provide fast, dynamic translations between 58 languages.

- Object detection and tracking API: This API lets an app locate and track the most prominent object, marked by a box around it, in a live camera feed. Developers can then identify the most prominent object by querying a cloud vision search API. As an example, IKEA is said to be experimenting with this API for visual furniture shopping.

- AutoML Vision Edge: For developers who want a custom ML model without needing much expertise, AutoML Vision Edge lets you build and train your own custom model to run locally on a user's device. To train a model, you simply upload your dataset (e.g., a set of images) to the Firebase console and click "train model" to train a TensorFlow Lite model on that dataset. Google announced that a company called Fishbrain used this API to train a model to identify the breed of a fish, while another company called Lose It! trained a model to identify the categories of food in an image.

Machine learning is a quickly growing field in computer science, so it's natural for developers to show interest in it. However, building and training ML models effectively without a data scientist on staff can be difficult, which is why Google is simplifying the process by automating the training of models with ML Kit. Developers can focus on building new apps with ML-powered functionality without having to devote significant time and effort to learning data science. With the addition of these 3 new APIs in ML Kit, we'll hopefully see a lot of useful new apps in Google Play.

Firebase Performance Monitoring for Web Developers

Consumers demand good performance from the apps and websites they use, but Firebase has thus far only provided native app developers with the means to effectively monitor the performance of their products. At Google I/O 2019, Google announced that Firebase Performance Monitoring will be made available for web developers using Firebase Hosting. Web developers can keep users engaged on their platforms by improving the speed of their web apps. To help them spot the key weaknesses in their sites' performance, Firebase will provide web-centric tools and telemetry measurements that show how real-world users experience a website. For instance, web developers will be able to monitor metrics such as time to first paint and input delay (how soon people first see and can interact with content on a web page), as well as mean latency. The overview dashboard will show these and other metrics, either by country or globally, to help web developers optimize the experience for their users.
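To make the "by country or globally" view concrete, here is a minimal sketch of that kind of aggregation. It is not the Firebase SDK or the dashboard's actual logic: the sample values, the tuple layout, and the function names `mean_metrics` and `summarize_by_country` are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical real-user samples: (country, first_paint_ms, input_delay_ms).
# These numbers are made up for illustration, not measured data.
SAMPLES = [
    ("US", 820, 12),
    ("US", 1100, 30),
    ("IN", 2400, 95),
    ("IN", 1900, 60),
    ("DE", 700, 8),
]


def mean_metrics(rows):
    """Average the two timing metrics over a list of samples."""
    return {
        "mean_first_paint_ms": mean(fp for _, fp, _ in rows),
        "mean_input_delay_ms": mean(delay for _, _, delay in rows),
    }


def summarize_by_country(samples):
    """Group samples by country code, then average each group."""
    groups = defaultdict(list)
    for sample in samples:
        groups[sample[0]].append(sample)
    return {country: mean_metrics(rows) for country, rows in groups.items()}


if __name__ == "__main__":
    print("global", mean_metrics(SAMPLES))
    for country, metrics in sorted(summarize_by_country(SAMPLES).items()):
        print(country, metrics)
```

Splitting the global and per-country views this way shows why the breakdown matters: a healthy global mean can hide a region where first paint is several times slower.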

Other Announcements

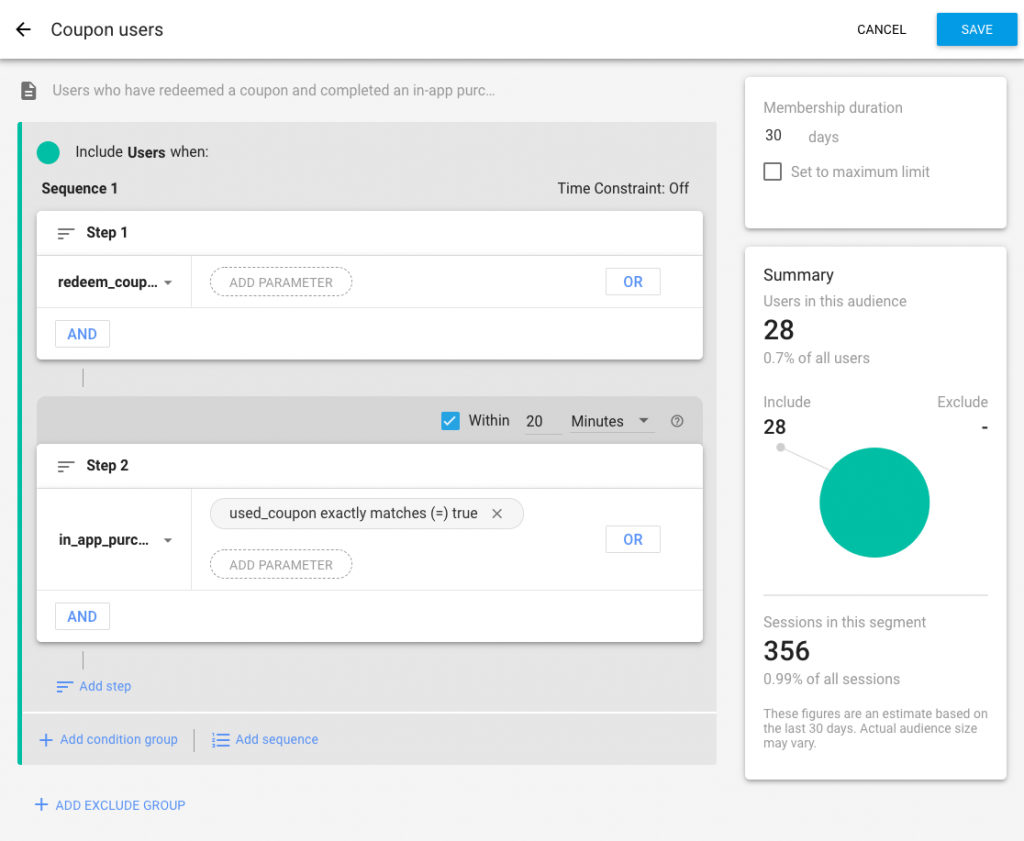

Updated Audience Builder in Google Analytics for Firebase

Building targeted audiences is critical to maximizing user engagement. You want to segment your users into the right categories so you know how best to target them with personalized incentives and encouragement, making them more likely to continue using your app or service. Google Analytics for Firebase helps developers better understand their users, and its updated audience builder will make it easy to create new audiences for targeting through Remote Config or re-engagement through In-App Messaging. The updated audience builder includes features like "sequences, scoping, time windows, [and] membership duration." As an example, Google says it's now possible to create an audience for users who redeem a coupon code and purchase a product within 20 minutes of coupon redemption.
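The coupon example combines a sequence (redeem, then purchase) with a time window (20 minutes). The sketch below shows that rule in plain Python. It is only an illustration of the logic, not how the audience builder is implemented; the event names `redeem_coupon` and `purchase`, the `in_audience` function, and the sample users are hypothetical.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=20)


def in_audience(events, first="redeem_coupon", then="purchase", window=WINDOW):
    """Return True if some `then` event follows a `first` event within `window`.

    `events` is a list of (timestamp, event_name) tuples for one user.
    """
    first_times = [t for t, name in events if name == first]
    then_times = [t for t, name in events if name == then]
    # The order matters: the purchase must come at or after the redemption.
    return any(r <= p <= r + window for r in first_times for p in then_times)


# Hypothetical event logs for three users.
T0 = datetime(2019, 5, 7, 10, 0)
USERS = {
    "alice": [(T0, "redeem_coupon"), (T0 + timedelta(minutes=15), "purchase")],
    "bob": [(T0, "redeem_coupon"), (T0 + timedelta(minutes=45), "purchase")],
    "carol": [(T0 - timedelta(minutes=10), "purchase"), (T0, "redeem_coupon")],
}

if __name__ == "__main__":
    audience = [user for user, events in USERS.items() if in_audience(events)]
    print(audience)
```

Here only the first user qualifies: the second purchases outside the window, and the third purchases before redeeming, which breaks the sequence.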

-

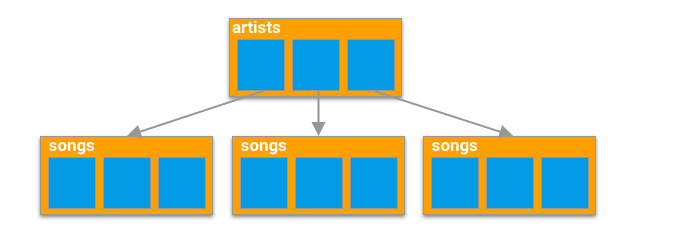

Cloud Firestore, a fully-managed NoSQL database, gets support for Collection Group queries which allows your app to "search for fields across all collections of the same name, no matter where they are in the database." Collection Group queries will, for example, allow a music app with a data structure consisting of artists and their songs to query across artists for fields in the songs regardless of the artist.

- The new Cloud Functions emulator will let developers speed up local app development and testing; it communicates with the Cloud Firestore emulator.

- If you need to debug crashes in your app, Firebase Crashlytics can help you diagnose stability issues. Its velocity alert tells you when a particular issue has suddenly increased in severity and is worth looking into; until now, the alert threshold could not be customized.
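To illustrate the Collection Group query idea from the Cloud Firestore item above, here is a small in-memory sketch using the artists-and-songs example. It does not use the Firestore SDK; the document paths, field names, and the helpers `collection_group` and `where` are hypothetical stand-ins meant only to show what "all collections of the same name" means.

```python
# Hypothetical documents keyed by path. Paths alternate between
# collection IDs and document IDs, as in a hierarchical document store.
DOCS = {
    "artists/a1": {"name": "Artist One"},
    "artists/a1/songs/s1": {"title": "Song One", "year": 2018},
    "artists/a1/songs/s2": {"title": "Song Two", "year": 2019},
    "artists/a2": {"name": "Artist Two"},
    "artists/a2/songs/s3": {"title": "Song Three", "year": 2019},
    "playlists/p1/songs/s4": {"title": "Song Four", "year": 2019},
}


def collection_group(docs, collection_id):
    """Select every document whose immediate parent collection is `collection_id`,
    regardless of where that collection sits in the hierarchy."""
    matches = {}
    for path, data in docs.items():
        parts = path.split("/")
        # The second-to-last segment is the collection that holds the document.
        if len(parts) >= 2 and parts[-2] == collection_id:
            matches[path] = data
    return matches


def where(docs, field, value):
    """Keep only the documents whose `field` equals `value`."""
    return {path: data for path, data in docs.items() if data.get(field) == value}


if __name__ == "__main__":
    songs_2019 = where(collection_group(DOCS, "songs"), "year", 2019)
    for path in sorted(songs_2019):
        print(path, songs_2019[path]["title"])
```

Without a collection group, the same lookup would require one query per artist; the group treats every `songs` collection as a single queryable set, including the one nested under a different parent.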

For more news on Firebase, stay tuned to the official blog or join the Alpha program to get a preview of upcoming features.