At its annual Search On event today, Google talked about several new features coming to Google Search later this year. The company also showcased a new feature for Google Lens that uses machine learning and augmented reality to deliver a more seamless real-time translation experience.

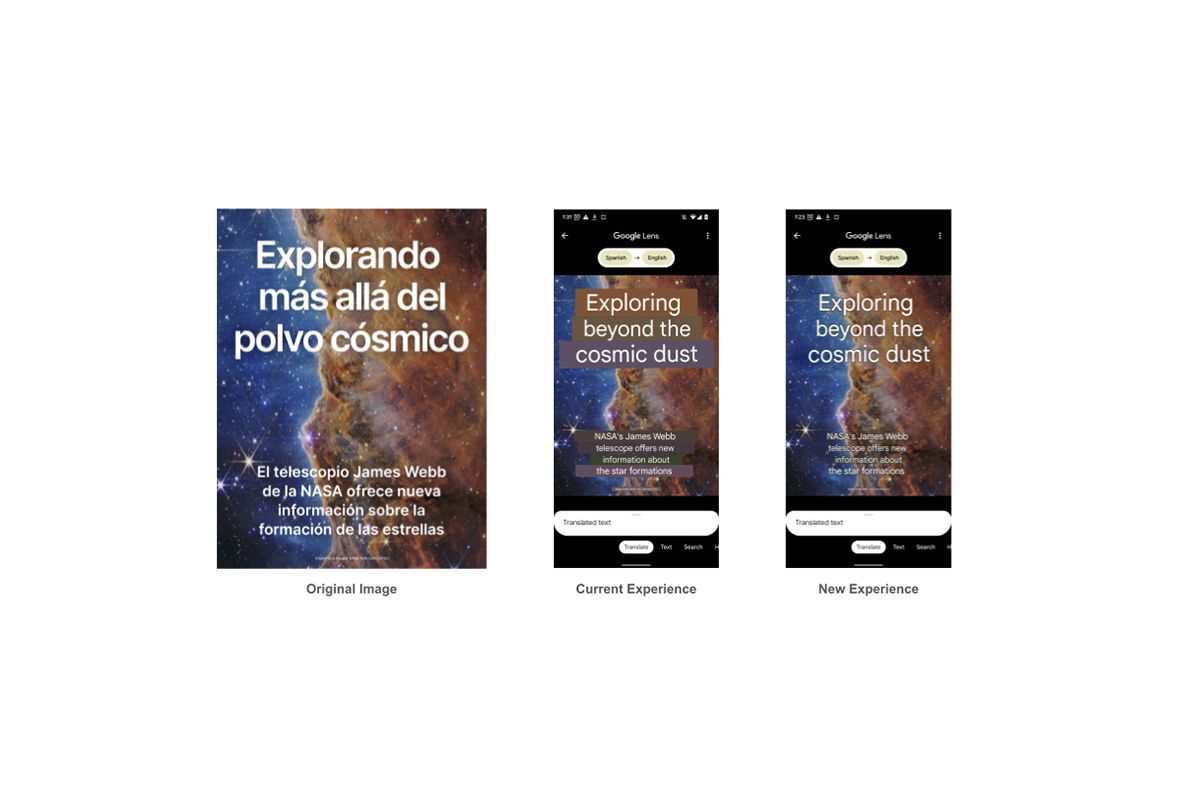

Google Lens has supported real-time translation for quite a while. However, the current version covers parts of the original image with blocks to display the translated text, which looks clunky. With the updated Google Lens AR Translate experience, Google aims to make real-time translations look more natural and seamless.

As you can see in the attached image, the new Lens AR Translate experience doesn't place any unsightly bars over the original image. Instead, it uses machine learning to erase the original text, fill in the pixels underneath with an AI-generated background, and then overlay the translated text on top. The result looks far more seamless.

Although this extra processing might seem likely to slow down Google Lens' real-time translations, Google says it won't. According to the company, the feature uses optimized machine learning models to erase the original text, recreate the background, and overlay the translated text in just 100 milliseconds. To achieve this, Google uses generative adversarial networks (GANs), the same technology that powers the Magic Eraser feature on Pixel devices.

Sadly, you will have to wait a while to try out the new Lens AR Translate experience. Google says it will roll out to users later this year, but the company has not provided a specific release timeline. It's also unclear whether the new experience will be available in Google Lens on all platforms.

What do you think of the new Lens AR Translate experience? Are you looking forward to trying it out? Let us know in the comments section below.