At Google's Search On event this year, the company announced several major improvements to Google Lens across iOS, Android, and desktop. Google Lens, Google’s AI-powered image recognition service, rolled out to Android phones in 2017 as a spiritual successor to Google Goggles, and until this year it remained available only on mobile phones. Lens also recently received a Material You update. The three improvements covered here are significant changes to the platform, particularly as Google introduces more AI-based enhancements.

Google Lens improvements

Multitask Unified Model (MUM) enhancements

Google announced the Multitask Unified Model, or MUM, at I/O this year. Google says that MUM can simultaneously understand information across a wide range of formats, like text, images, and video. It can also draw insights from and identify connections between concepts, topics, and ideas about the world around us. As an example, the company showed a user selecting the pattern on a shirt in a Google search and then searching for that same pattern on socks. This feature is set to launch on Google Lens in the coming months.

Google Lens on Desktop

A few months ago, Lens on Desktop was spotted as an option when right-clicking images. It was gated behind a server-side switch, so not everyone had it. Now Google is rolling out the feature to everyone over the coming months. Images, video, and text content on a website can be searched with Lens, with the search results appearing in the same tab.

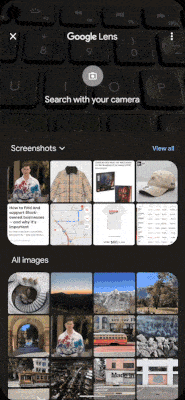

Lens Mode on iOS

All images in the Google app on iOS are now also searchable with Lens. Google calls this "Lens Mode", and it allows you to search shoppable images on websites as you browse in the Google app on iOS. This feature is currently limited to the U.S., and there's no word on a global release just yet.