Following yesterday's main presentation at Google I/O 2021, the company held several sessions that are now available on-demand via YouTube. One of the sessions covered what's new in machine learning for Android and how Google is making on-device ML faster and more consistent for developers.

Machine learning powers features that Android users rely on every day, such as background blur in images, background replacement in video calling apps, and live captioning in calls on Pixel phones. While machine learning is becoming more and more advanced, Google said there are still several challenges in deploying ML-powered features, including concerns about app bloat and performance variation. Feature availability is also an issue, because not every device has access to the same APIs or API versions.

Image: Google

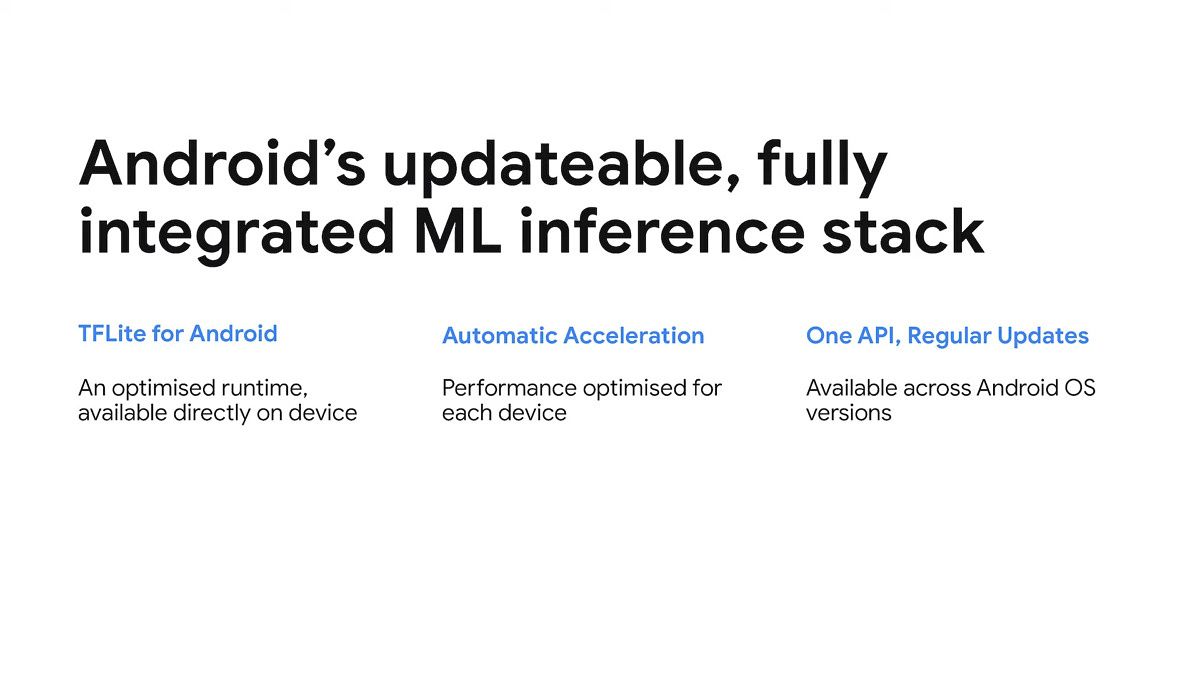

To solve this, Google is announcing an updatable, fully integrated ML inference stack for Android, so that all devices share a common set of components that work together. This brings the following benefits to app developers:

- Developers no longer need to bundle code for on-device inferencing in their own app.

- Machine learning APIs are more integrated with Android to deliver better performance where available.

- Google can provide a consistent API across Android versions and updates. Regular API updates come directly from Google and are delivered independently of OS updates.

Image: Google

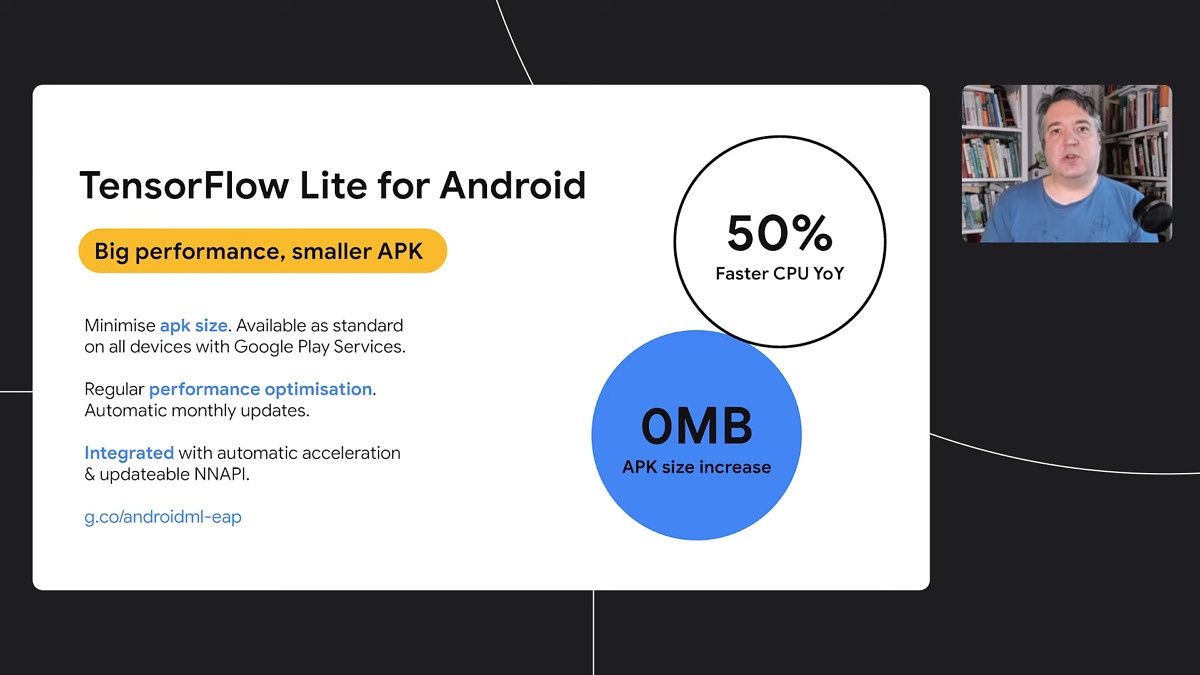

To make this happen, Google is doing a few things. First, it said that TensorFlow Lite for Android will be preinstalled on all Android devices through Google Play Services, so developers will no longer need to bundle it with their own apps. Google is also adding a built-in allowlist of compatible GPUs on Android that can be used for hardware acceleration. In addition, the search giant is introducing "automatic acceleration," which takes a developer's machine learning model into account and checks whether the model runs better on the CPU, the GPU, or another accelerator.

Image: Google

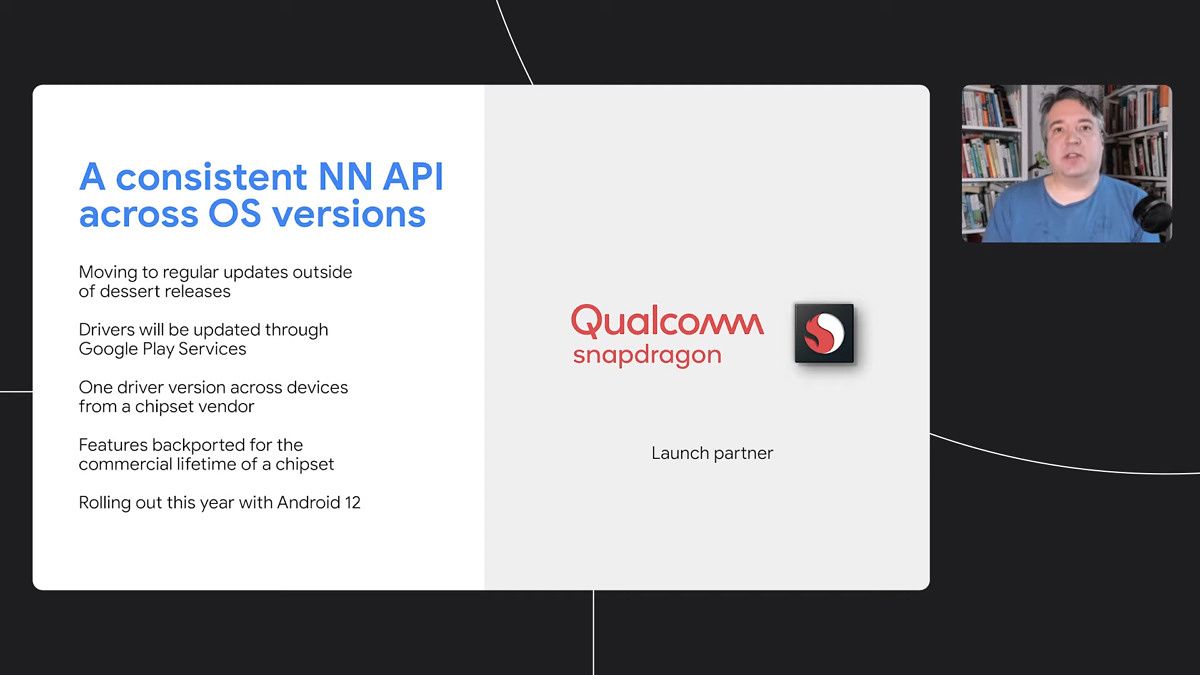

Next, Google said it's moving the Neural Networks API (NNAPI) out of the core OS framework so that it's updatable through Google Play Services. That means developers can use the same NNAPI spec even if two devices are running different Android versions. Notably, the NNAPI runtime was added as a Mainline module in Android 11, which is possibly how these updates are being delivered. Google is working with Qualcomm to make updatable NNAPI drivers available on devices running Android 12, and new features will be backported for the commercial lifetime of a chipset. Furthermore, updates will be delivered regularly and will be backward compatible with older Snapdragon processors.

Improvements to machine learning are just a tiny fraction of what Google announced this week. The search giant unveiled a major redesign in Android 12 and also shared the first details about its collaboration with Samsung to revamp Wear OS.

https://www.youtube.com/watch?v=uTCQ8rAdPGE