There has been a duopoly in consumer graphics cards for far too long. AMD and Nvidia have had the market all to themselves. Things are getting more powerful, but also more expensive. Enter player three, Intel, with its first lineup of dedicated GPUs in two decades. The flagship release is this, the Intel Arc A770 Limited Edition.

There will be third-party versions of the Intel Arc GPUs, but this one is the first out of the gates from the manufacturer itself. The "Limited Edition" comes with one key spec difference to what we expect Intel's partner cards will have. This one has a whopping 16GB of VRAM and only costs $349. That in itself is staggering.

Intel claims that this first attempt and its sister release, the A750, are in the ballpark of Nvidia's RTX 3060. A mid-range but still very capable GPU. That kind of performance and being able to undercut on price makes for a tantalizing headline, but how does it actually transpire in practice? Is the Arc A770 any good? And importantly, is it good enough to compete with the big dogs in the yard?

The answer is yes, but with some caveats. But this is only the beginning.

Intel Arc A770 Limited Edition

Intel's first flagship consumer GPU in two decades brings a much-needed third player to the table at an incredible price

Navigate this review

- Intel Arc A770 pricing and availability

- Intel Arc A770 specs and hardware

- Test Bench specs

- Intel Arc A770 gaming performance

- Intel Arc A770 encoding

- Who should buy the Intel Arc A770

About this review

This review was conducted using a pre-release sample of the Intel Arc A770 Limited Edition as provided by Intel. All performance data gathered is our own and nobody at Intel has seen or had any input into the contents of this review.

Intel Arc A770 pricing and availability

The Intel Arc A770 Limited Edition is set to become available from Intel on October 12 at a retail price of $349. The recommended price for cards with 8GB of VRAM compared to the 16GB available in the Limited Edition is $329.

Intel isn't commenting on the availability of third-party versions of the A770, but we've already seen one confirmed product from Acer's Predator Gaming brand. This one doesn't have pricing or availability at this time.

Intel Arc A770 specs and hardware

The specs for the Intel Arc A770 Limited Edition are shown in the image above, which also compares it to its sister card, the A750 (review coming soon). The only difference between this and other versions of the A770 is that it has 16GB of VRAM. Third parties are expected to mostly go with 8GB. All other specs remain the same.

We took a look at the hardware previously in our first unboxing, and it's a little surprising just how nice the A770 is. As a piece of hardware design, it's exquisite. The entire design is screwless, save for the ones required to actually fix the I/O plate to the card. It's subtle and stylish, and on the A770 you have a splash of RGB to remind you that you're a gamer.

This is the best looking graphics card in some time

The backplate is smart, and the whole thing is covered in a stealthy matt black finish. I'm not sure if it's better or worse that it has a soft-touch feel, but it's got it regardless. As a piece of hardware, this feels like something that costs much more than it does.

On that front Intel absolutely nailed it with the A770 and indeed with the A750. This is probably the best-looking graphics card we've seen in a while.

The RGB is controlled through a dedicated app on the PC, but only if you connect the little cable in the box to a USB header on your motherboard. It's probably my only gripe with the exterior. The cable is just about long enough to feed through tidily, but it's another cable dangling off the front of the card. Picky, perhaps, but it's something else to try and make look presentable. It could have been better on the end, maybe.

The A770 has four display outputs which include both DisplayPort and the latest HDMI 2.1. It also comes with a companion app, Intel Arc Control, to manage drivers, system monitoring, and performance tuning.

Test bench specs

As tempting as it is to pair the A770 with the most expensive, fastest CPU around, the absolute fastest memory and such, that rather misses the kind of build this graphics card is targeted at.

There will be a time when we'll do that. When the 13th Gen Core i9-13900K is here, we'll put together an "Intel super build" to see what happens there. But the A770 will appeal most to those on a more modest budget, so for testing, I've slotted it into my modest gaming PC build as below.

- Intel Core i5-11600K

- 32GB GSkill Trident DDR4-3200

- Intel Arc A770 16GB

- Crucial P5 Plus PCIe 4.0 SSD

All games tested were loaded from a Crucial MX500 SATA SSD.

What this test system does mean is that for now we're mostly focused on gaming. Because I don't have a 12th Gen Intel CPU handy, tools like Deep Link and Hyper Encode are off limits, for now. For those, you need either a 12th Gen or 13th Gen Intel CPU with integrated graphics. We'll update the review accordingly after the 13th Gen drops with more details on these features.

It's also worth noting that you need to enable Resizable BAR on your motherboard. Intel as good as says the A770 will be garbage without it, so before beginning, dive into your UEFI/BIOS. Most modern motherboards should have it; if not, you might need to install an update. But Intel's companion software will warn you if you don't have it enabled, so take it as read that it needs to be on.

Intel Arc A770 gaming performance

When it comes to gaming there's more than just the GPU to talk about. Intel also has its own upscaling tech now, known as XeSS. In simple terms, you can think of it like Nvidia DLSS or AMD's FSR. It renders frames at a lower resolution, passes them through its engine, and upscales them back to whatever resolution you're playing at. It's not quite the same as playing at native resolution, but the idea is that it's close enough in detail while giving your frames per second a nice little boost.
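To put rough numbers on the render-low, upscale-back idea described above, here's a minimal sketch of what the internal render resolution might look like at each XeSS quality level. The per-axis scale factors are an assumption based on Intel's published XeSS 1.0 presets, not something measured in this review, and individual games may use different values.

```python
# Assumed per-axis scale factors for XeSS 1.0 quality presets.
# These are illustrative assumptions, not figures measured in this review.
XESS_SCALE = {
    "ultra_quality": 1.3,
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
}


def internal_resolution(out_w, out_h, preset):
    """Return the (width, height) the game would render at before upscaling."""
    scale = XESS_SCALE[preset]
    return round(out_w / scale), round(out_h / scale)


def pixel_fraction(out_w, out_h, preset):
    """Fraction of the output pixel count that is actually rendered."""
    w, h = internal_resolution(out_w, out_h, preset)
    return (w * h) / (out_w * out_h)


if __name__ == "__main__":
    # 1440p output, the resolution this card is mostly aimed at.
    for preset in XESS_SCALE:
        w, h = internal_resolution(2560, 1440, preset)
        share = pixel_fraction(2560, 1440, preset)
        print(f"{preset:>13}: {w}x{h} ({share:.0%} of native pixels)")
```

Under those assumed factors, the performance preset at 1440p renders a quarter of the native pixel count, which is where the extra frames come from.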

XeSS isn't exclusive to Intel Arc, either, and for comparison's sake, I tested it where possible on my Nvidia GeForce RTX 2080. The Arc A770 is positioned around the RTX 3060, but alas, my RTX 3060 has display output issues. The RTX 2080 is similar in gaming performance in my experience, though it's not an apples-to-apples comparison.

XeSS doesn't quite have the coverage of DLSS or FSR, but it's rolling out in a few games already. Shadow of the Tomb Raider and Death Stranding: Director's Cut have recently been updated, and Intel provided access to early builds of Ghostwire Tokyo and Hitman 3 that will be joining the party soon.

Synthetic benchmarks

Using the 3DMark suite of graphics benchmarks, the table below shows relative performance compared to the 2080 in Fire Strike, Time Spy, and the DirectX Ray Tracing benchmarks. In all of these higher is better.

| Benchmark | Intel Arc A770 16GB | Nvidia RTX 2080 |
|---|---|---|
| Fire Strike Ultra | 7,106 | 6,351 |
| Time Spy (DX12) | 13,412 | 10,810 |
| Time Spy Extreme (DX12) | 6,334 | 5,032 |
| DirectX Ray Tracing | 31.5 FPS | 20.75 FPS |

In all of these benchmarks, the new A770 handily outdoes one of Nvidia's older, but still potent GPUs. But it's less clear-cut when you get into gaming.
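For a sense of scale, the gaps in the 3DMark results can be turned into percentages. This is just arithmetic on the scores reported in this review; the function name is mine and purely illustrative.

```python
# 3DMark results as reported in this review: (Arc A770 16GB, RTX 2080).
scores = {
    "Fire Strike Ultra": (7106, 6351),
    "Time Spy (DX12)": (13412, 10810),
    "Time Spy Extreme (DX12)": (6334, 5032),
    "DirectX Ray Tracing (FPS)": (31.5, 20.75),
}


def uplift_percent(a770, rtx2080):
    """Percentage by which the A770 result exceeds the RTX 2080 result."""
    return (a770 / rtx2080 - 1.0) * 100.0


if __name__ == "__main__":
    for name, (a770, rtx2080) in scores.items():
        print(f"{name}: {uplift_percent(a770, rtx2080):+.1f}%")
```

That works out to a lead of roughly 12% in Fire Strike Ultra, around 24-26% in the two Time Spy runs, and about 52% in the DirectX Ray Tracing test.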

XeSS games

Let's break out the games with XeSS separately from those that don't have it. So for this, we'll be looking at four titles: Shadow of the Tomb Raider, Hitman 3, Ghostwire Tokyo, and Death Stranding.

The tables below represent the performance across both the reference RTX 2080 and the Arc A770. All games were run on their respective highest graphical settings.

| Game | Intel Arc A770 16GB | Nvidia RTX 2080 8GB |
|---|---|---|
| Shadow of the Tomb Raider | | |
| Hitman 3 | | |
| Ghostwire Tokyo | | |
| Death Stranding Directors Cut | | |

There are some things to consider with these results, not least that XeSS does appear to be very much a work in progress. Death Stranding is an obvious outlier, in my testing yielding a lower average frame rate with XeSS turned on than when playing without it. The results XeSS yields on the RTX 2080 are also mightily impressive, but not without their own issues. The Arc A770 seems much better suited to XeSS, capable of running all four of these titles at its ultra-quality setting without tearing and with less artefacting than on the RTX 2080. The Nvidia card also experienced a lot of screen tearing (with Vsync off).

Ultimately DLSS where available still seems to have the edge, and if you have an Nvidia card you should probably always choose it. But on the Arc A770, it's (mostly) worth enabling. It gives good gains in some games, Hitman 3 especially, and helps out enough that you can actually try and use ray tracing.

XeSS is still brand new and will continue to improve, but paired with the A770 it's impressive. On ultra settings, you really have to look hard to notice any image quality issues. At 1440p it looks to the casual eye every bit as good as when it's turned off. Step down to performance settings and you notice a few more issues, such as the odd flicker or mild artefacting, but I'd argue the gains are worth it.

Non-XeSS games

For now, XeSS isn't even totally necessary on the Arc A770. 1080p performance is exceptional and when you step up to 1440p you're still capable of maxing out the graphics and enjoying good frame rates. Again, with some caveats that we'll get to below.

In this section we're looking at Metro Exodus: Enhanced Edition, Forza Horizon 5, Marvel's Spider-Man Remastered, and World War Z Aftermath.

| Game | Intel Arc A770 16GB | Nvidia RTX 2080 8GB |
|---|---|---|
| Marvel's Spider-Man Remastered | | |
| Forza Horizon 5 | | |
| Metro Exodus: Enhanced Edition | | |
| World War Z | | |

The Arc A770 shone particularly well in Forza Horizon 5 and Metro Exodus: Enhanced Edition. The latter won't even run on a GPU without ray tracing capabilities and it actually runs better than my Nvidia card. Spider-Man is also perfectly playable at 1440p, weirdly at similar FPS to 1080p, with the graphics maxed out, though if you add ray tracing into the mix you see a spot of freezing and around a 20 FPS drop.

World War Z was the only real bust, with dreadful DX11 performance and for some reason an inability to select Vulkan. The Arc A770 supports Vulkan on the hardware level and works perfectly well in other titles such as Doom. Intel's aware of the issues I've seen, though, and is looking into fixes. Control Ultimate Edition is also an oddity. The Arc A770 supports both DX12 and DXR ray tracing, but the game refused to enable it, even when manually launching the DX12 executable. If the game was running in DX11 mode it would at least explain the pretty bad performance. But there will be teething issues.

Without hardware DX9 support it's anyone's guess as to how your games will play

DX11, and indeed even DX9, is where the A770 starts to look a little less impressive. We already knew prior to launch that Intel was pulling hardware support for DX9, instead relying on the DX12 emulation of it. But early tests in mid-2022 raised concerns about DX11 performance, too. Aside from the aforementioned WWZ, I haven't come across any absolute game-breakers that run on DX11, but you can feel the difference compared to playing a DX12 or Vulkan game.

Take The Witcher 3, for example. The A770 will let you max it out at 1440p and you'll get frame rates between 80-90 FPS. But it's also fairly inconsistent, remedied either by turning the graphics down or by imposing a 60 FPS cap. DX9 is where the rollercoaster takes another turn because you're now relying not on Intel, but Microsoft, for it to even work at all.

Running Borderlands 2 was fine, though again, with wildly inconsistent frame rates. And it's not nearly as fast as you would perhaps expect from an older game. But it works and is totally playable. The same can't be said of Batman: Arkham Asylum, though. Things start out OK, but a couple of minutes into the game you get a complicated error message and a total crash.

The bottom line is that you should be OK in DX11 games, maybe even DX9 games. But it's not guaranteed. The lack of DX9 support is understandable, it is, after all, really old. But there are still a lot of popular games using DX11 and I hope that Intel will continue making that better. If a game supports DX12 or Vulkan then you're in great hands with the Arc A770. Performance is extremely good for a $349 card in newer games. And arguably that's what's most important.

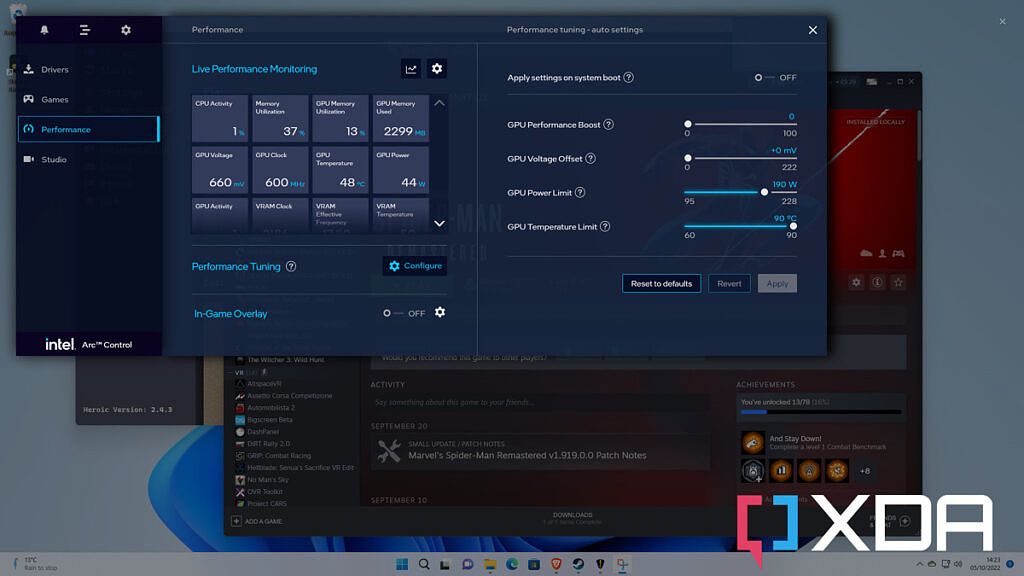

Performance tuning

All the benchmarks run above were with the Arc A770 in its stock settings. When you first turn it on the power limit will be set to 190W and there's zero tuning. You can of course tinker with this yourself using Arc Control. There is definitely a little more performance on the table, but whether it's worth it or not I'm not so sure.

You see some gains in synthetic benchmarks, sure. Raise the power limit to the 225W the A770 is rated for and your Fire Strike Ultra score goes up a bit. Put the performance boost slider up to 20 and you can increase it by a couple of hundred points. But you're also increasing power use, though, it should be said, not heat. The Arc A770 has sound thermals, and the most I've been able to push it to is 75C with these mild performance tweaks. The absolute limit is 90C, so there's room to play around if you don't mind things a little toasty.

In games, you could get a 10-15 FPS boost with these same settings. Hitman 3 without ray tracing on responded the best, while gains in Shadow of the Tomb Raider were minimal. It'll be a game-by-game basis, but if you need a hand getting a few extra frames, it's there for the taking.

Intel Arc A770 encoding

One of the big draws of the Arc GPUs to many is its inclusion of hardware AV1 encoding. This even applies to the entry-level Arc A380. Given their price, one or two content creators are surely casting an eye in Intel's direction.

AV1 is still very much a new kid on the block, though. Software such as DaVinci Resolve now has support for hardware AV1 encoding on the A770, and it's being included in OBS as well. Arc can also make use of Intel's Deep Link and Hyper Encode technologies, which allow the GPU to work in harmony with the integrated graphics on your Intel CPU. You need a 12th Gen or higher for that, which I currently don't have, so we'll have to wait a few weeks to try that out.

The tech is sound, and it seems to work. In DaVinci Resolve I rendered a 4-minute-30-second 4K60 clip at a bitrate of 40,000 using the hardware AV1 encoder in just under 7 minutes, and it used 10GB of that 16GB of VRAM. The resulting file looked as good as an H.264 encode but ended up around 200MB smaller. In my admittedly limited testing in Resolve, hardware AV1 encoding didn't offer much of a speed increase over H.264 using Quick Sync.
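To put that Resolve render in context, here's a rough back-of-the-envelope sketch of the encode speed and the nominal file size the bitrate implies. Two assumptions are mine: the render time is taken as a flat 7 minutes (it was just under), and the 40,000 bitrate is read as kilobits per second. Audio and container overhead are ignored.

```python
def clip_seconds(minutes, seconds):
    """Clip duration in seconds."""
    return minutes * 60 + seconds


def encode_fps(duration_s, source_fps, render_s):
    """Average frames encoded per second of wall-clock render time."""
    return duration_s * source_fps / render_s


def nominal_size_mb(bitrate_kbps, duration_s):
    """Video stream size in decimal megabytes for a constant bitrate."""
    bits = bitrate_kbps * 1000 * duration_s
    return bits / 8 / 1e6


if __name__ == "__main__":
    duration = clip_seconds(4, 30)   # 4 minutes 30 seconds, as in the test
    render = 7 * 60                  # assumed upper bound: "just under 7 minutes"

    print(f"Frames in clip: {duration * 60}")
    print(f"Encode speed: at least {encode_fps(duration, 60, render):.1f} FPS")
    print(f"Speed vs real time: at least {duration / render:.2f}x")
    # Assumes the bitrate setting is in kb/s; the review does not state the unit.
    print(f"Nominal size at 40,000 kb/s: {nominal_size_mb(40000, duration):.0f} MB")
```

On those assumptions the encoder is working at a bit under two-thirds of real time for 4K60, which is a useful baseline when comparing against other hardware encoders.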

We've got a full explainer on why AV1 is a big deal and you should definitely give that a read. Nvidia is following close behind with hardware AV1 encoding on the RTX 40-series but Intel is just about first to the table. Though if you count the Arc A380 as well, Intel really was first. If it matters to you, the A770 is a good choice.

https://www.youtube.com/watch?v=b7-S8S-8s_Y

This sample clip was recorded in OBS at Twitch quality settings (1080p, 60 FPS, 6000 bitrate) using the A770. The settings are a little awkward, but using the hardware QSV encoder seems to result in good quality footage while taking the burden off the rest of the system. XSplit's Gamecaster also has support for AV1, but until you can realistically stream using it the applications are limited for gamers. Still, there's nothing wrong with being ready for the future.

Who should buy the Intel Arc A770?

So, the million-dollar question: Should you buy one? That's a little more complicated than a simple yes or no.

You should buy if

- You're building a gaming PC on a tighter budget

- You're looking for AV1 encoding

- You're looking for a card with a lot of VRAM

You shouldn't buy if

- Ray tracing is important

- You want to play older games

- You're not prepared for some teething problems

On one hand, finally, there's a third player in the GPU market. And Intel is keen to do what it can to help bring prices down. If you're already dreaming of the $1,600 RTX 4090, this isn't going to come close to that. But it's in a more important space, it's in the part of the market that the masses are buying.

We were promised RTX 3060 levels of performance and for the most part, Intel has delivered on that promise. You're covered up to 1440p with high frame rates, high details, and a generally good time in newer games. This is a GPU built for the future, not the past. And while it can handle ray tracing, sometimes with astonishing results, this is a mid-range card. If ray tracing is important, you'll still really want to spend more.

As such if you have a library of older games your mileage will vary wildly. AMD and Nvidia definitely have the edge here, but those companies also have many, many years of experience. This is year one for Intel Arc, and you can't really criticize Intel too much for looking forward, not back.

You will have to be open to such issues and other teething problems, though. During the time of this review, Intel has already updated the driver twice, and all signs point to there being plenty more to come after launch. A few rough edges aside, it hasn't been nearly as bad as some corners of the internet had predicted it to be.

It's hard to say you should definitely rush out and buy one, though I do firmly believe it's worth it. For one, if you're mad that the choice is usually between AMD and Nvidia, get out there and support the new guy. Without support, it'll never be a success. But the Arc A770 isn't a bad graphics card. It looks incredible inside my PC and the performance is exactly right for what I generally want from a GPU. I don't game higher than 1440p and as long as it looks good and is stable, I'm happy.

If that sounds like you, then give it a shot. For a first-generation product, the Arc A770 is pretty damn good, especially for $349. And this is just the beginning. Welcome to the party, pal.

Intel Arc A770 Limited Edition

RTX 3060-esque performance, ray tracing, XeSS, DisplayPort 2.0 and HDMI 2.1 in a neat little package for $349. Oh, and it has 16GB of VRAM.