Android 12 brought with it a ton of new features, and one of the most mysterious is the Private Compute Core (PCC). It's essentially a place where sensitive data can be processed on-device, isolated from everything else running on the phone. It powers Pixel features such as Now Playing, Live Caption, and Smart Reply, but for a long time, there wasn't much information about how it works, and we were left to guess and figure it out ourselves.

Google said long ago that it would open-source the code for Private Compute Services (PCS) so that independent security researchers could audit it. It finally released that code at the end of 2022, alongside a technical white paper detailing how it works. Private Compute Services is said to provide a privacy-preserving bridge between the PCC and the cloud, making it possible to deliver new AI models and other updates to sandboxed machine learning features over a secure path. According to Google, features communicate with PCS over a set of purposeful open-source APIs, and PCS removes identifying information from data and applies privacy technologies such as Federated Learning, Federated Analytics, and Private Information Retrieval.

Google, in its own words, had this to say:

[The PCC] is a secure, isolated data processing environment inside of the Android operating system that gives you control of the data inside, such as deciding if, how, and when it is shared with others. This way, PCC can enable features like Live Translate without sharing continuous sensing data with service providers, including Google. PCC is part of Protected Computing, a toolkit of technologies that transforms how, when, and where data is processed to technically ensure its privacy and safety.

Private Compute Core is a virtual sandbox

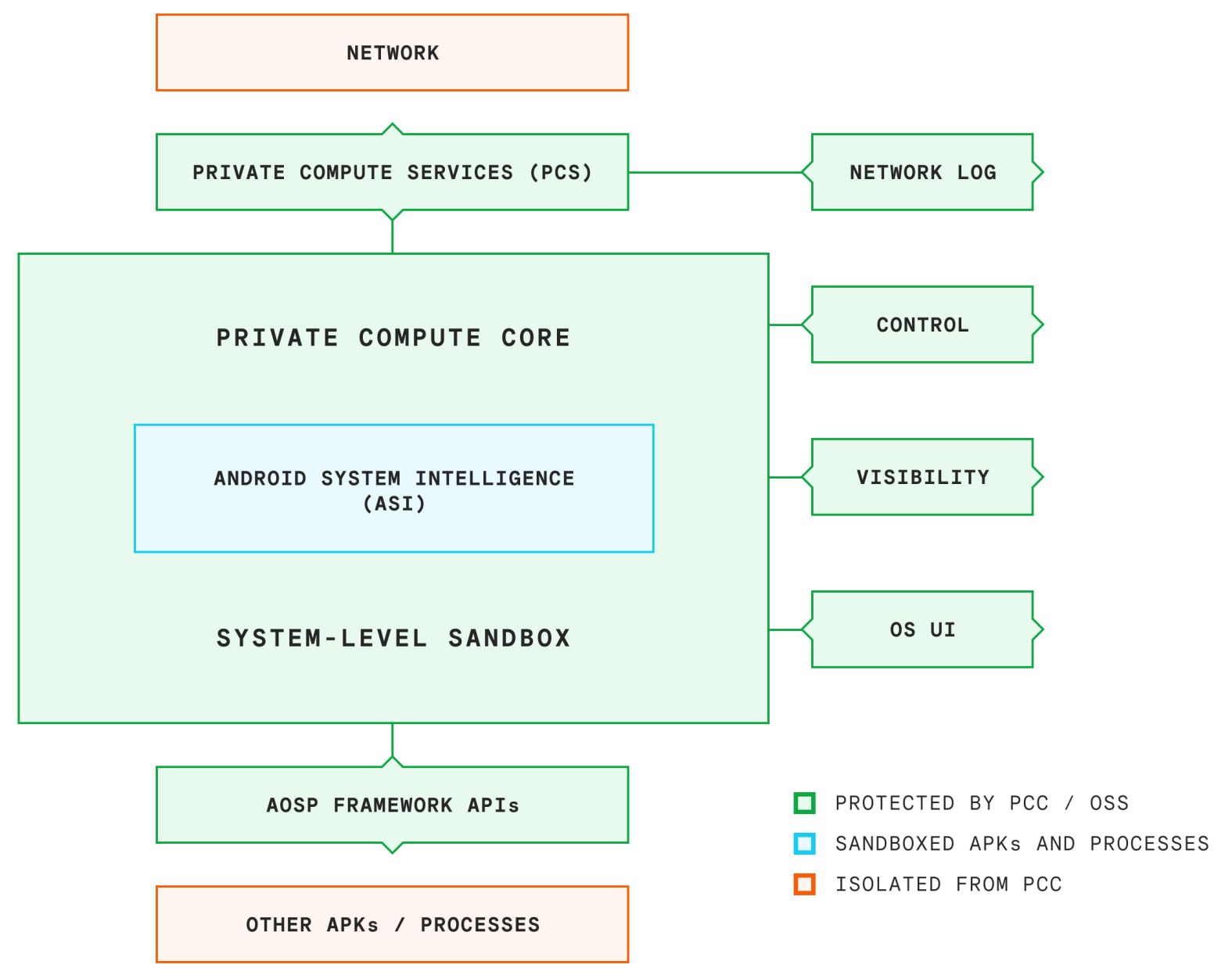

Now that we have the basics down, what exactly is the PCC? Google has now shared some technical details of its architecture, including how it exists in its own isolated virtual sandbox. Features can run inside that sandbox and process OS-level or ambient data, and the results are shown to the user either through the trusted operating system or through access-controlled open-source framework APIs.

In essence, it’s a sandbox for features that might process sensitive information. Smart Reply scans your messages, Live Caption listens to whatever is playing, and Now Playing listens to the audio around you. These features are housed inside Android System Intelligence, which relies solely on PCS for connections outside the sandbox. PCS allows for the following:

- Federated learning and federated analytics

- Private Information Retrieval (PIR)

- HTTPS download-only transport

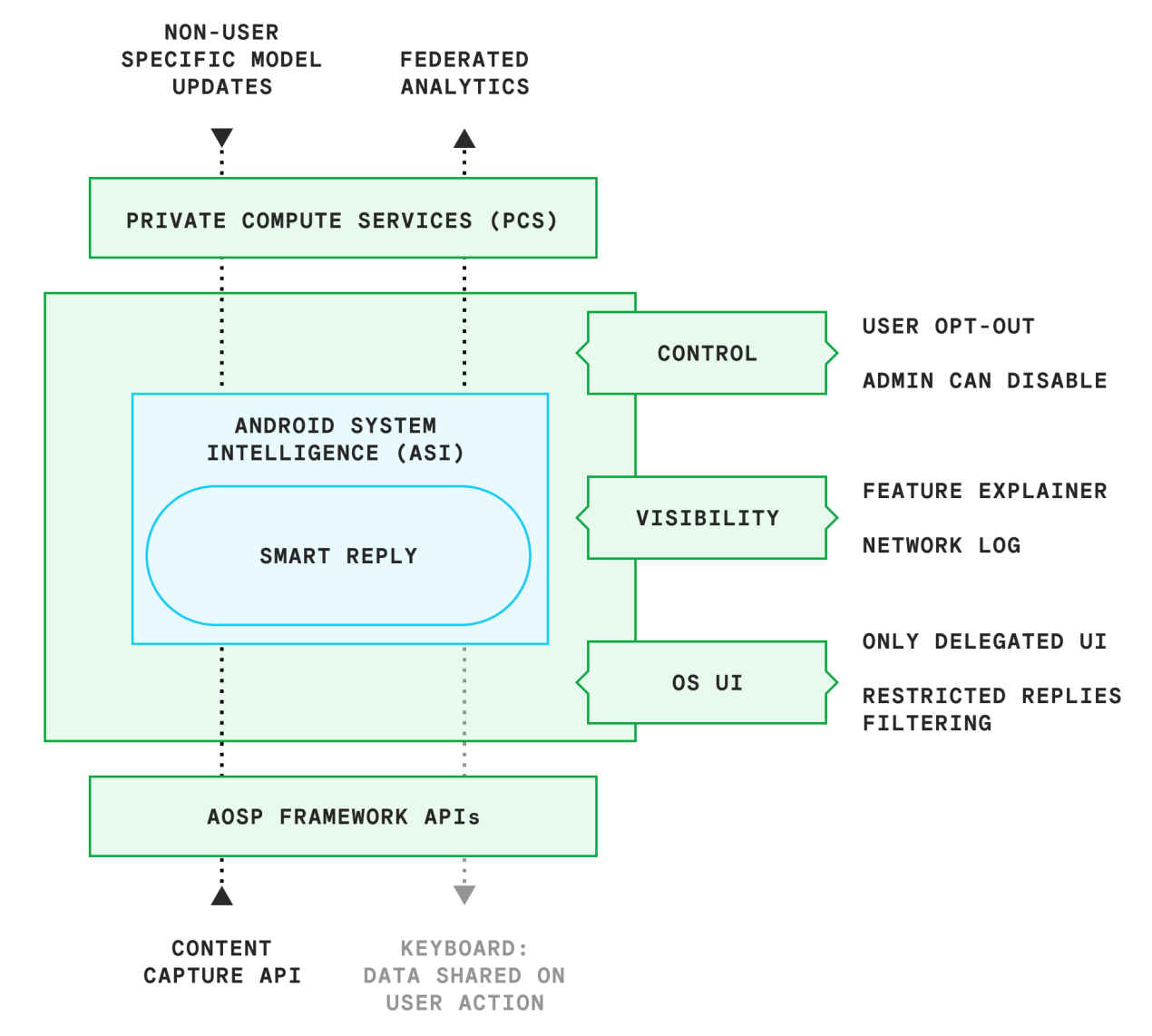

For example, when typing in a conversation, Google explains that Gboard will ask Smart Reply to make suggestions based on the conversation on screen. Smart Reply then processes the conversation in the PCC securely and confidentially. Sensitive data is not shared with the app, the keyboard, or Google, and all Gboard gets in response is a list of suggested replies.

Anything processed inside the Compute Core can only access the network through PCS, which strips out identifying information and applies privacy technologies including Federated Learning, Federated Analytics, and Private Information Retrieval. This abstracts network access away from sensitive features, which can only reach the network through “very narrow, purposeful APIs” to do things like “download models, use federated learning, and more.”
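The narrow-API idea can be modeled in a few lines. The following is a minimal Python sketch, not Google's PCS code: `PcsGatewaySketch`, `download_model`, the host allowlist, and the no-query-string rule are all hypothetical, chosen to illustrate the "HTTPS download-only transport" item listed earlier. The sandboxed feature is handed only this object, so the one network operation it can perform is fetching a file from an allowlisted HTTPS host.

```python
from typing import Callable, Iterable
from urllib.parse import urlparse


class PcsGatewaySketch:
    """Hypothetical download-only bridge; not the real PCS API."""

    def __init__(self, fetch: Callable[[str], bytes], allowed_hosts: Iterable[str]) -> None:
        # `fetch` performs the actual HTTPS GET; injected so the sketch runs offline.
        self._fetch = fetch
        self._allowed_hosts = frozenset(h.lower() for h in allowed_hosts)

    def download_model(self, url: str) -> bytes:
        parsed = urlparse(url)
        if parsed.scheme != "https":
            raise PermissionError("only HTTPS downloads are allowed")
        if parsed.hostname not in self._allowed_hosts:
            raise PermissionError(f"host not allowlisted: {parsed.hostname}")
        if parsed.query:
            # Assumption: a download-only channel carries no per-user payload,
            # so query strings (a place to smuggle data out) are rejected.
            raise PermissionError("query strings are not allowed")
        return self._fetch(url)


if __name__ == "__main__":
    gateway = PcsGatewaySketch(lambda url: b"model bytes", ["models.example.com"])
    print(gateway.download_model("https://models.example.com/smart_reply.tflite"))
    try:
        gateway.download_model("https://tracker.example.net/upload")
    except PermissionError as err:
        print("blocked:", err)
```

Because the feature never holds a general-purpose socket or HTTP client in this model, it has no method it could use to upload what it processed.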

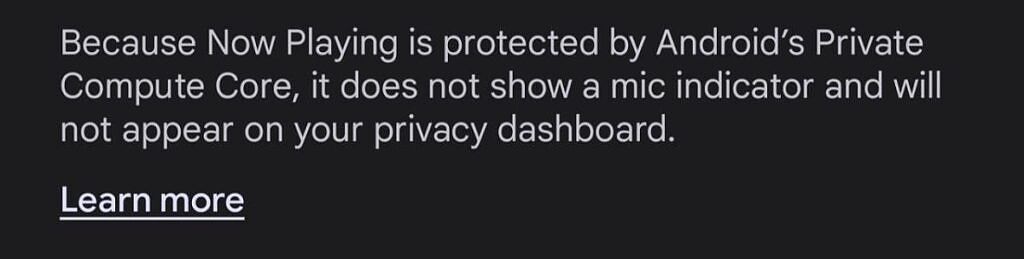

But is the PCC active on Android smartphones in the way Google has explained? Nobody can say for sure. My gut feeling is that the PCC exists for very specific functionality and nothing more, even though it’s advertised as active on the official Android 12 website. That would also explain why only PCS, and not the features running inside the PCC, has been open-sourced: the PCC may only serve a set of proprietary Google features. This is further supported by the fact that Now Playing can bypass the microphone indicator because it runs through the Compute Core.

Data stored and processed within this sandbox isn’t exposed to other apps unless the user says otherwise. For example, a Smart Reply suggestion remains hidden from your keyboard and from the app you’re typing into until you tap on it. PCS not only bridges the gap between the PCC and the cloud but also keeps those features updated with new AI models and other changes.
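The "hidden until you tap" behavior can be pictured as the sandbox handing out opaque handles instead of text. The sketch below is a hypothetical Python model, not Android's actual delegated-UI API: `SmartReplySandboxSketch`, `suggest`, and `on_tap` are invented names, and the canned replies stand in for the real on-device model. The keyboard receives only random handles; suggestion text is released to the app only when the user taps one.

```python
import secrets
from typing import Callable, Dict, List


class SmartReplySandboxSketch:
    """Hypothetical model of a PCC feature: suggestion text never leaves until a tap."""

    def __init__(self) -> None:
        self._pending: Dict[str, str] = {}  # opaque handle -> suggestion text

    def suggest(self, conversation: List[str]) -> List[str]:
        """Return opaque handles; the caller (e.g. a keyboard) never sees the text."""
        self._pending.clear()
        last = conversation[-1] if conversation else ""
        # Stand-in for the on-device ML model: canned replies.
        candidates = ["Yes", "No"] if last.rstrip().endswith("?") else ["OK", "Thanks!"]
        handles = []
        for text in candidates:
            handle = secrets.token_hex(8)  # random, reveals nothing about the text
            self._pending[handle] = text
            handles.append(handle)
        return handles

    def on_tap(self, handle: str, commit: Callable[[str], None]) -> None:
        """User tapped a suggestion: only now is its text committed to the app."""
        text = self._pending.pop(handle)  # raises KeyError for unknown handles
        self._pending.clear()  # discard the suggestions that were not chosen
        commit(text)
```

In this model, `on_tap` is the only path by which suggestion text reaches the app; the keyboard holds nothing but random hex strings.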

How Smart Reply, Live Caption, and Screen Attention work

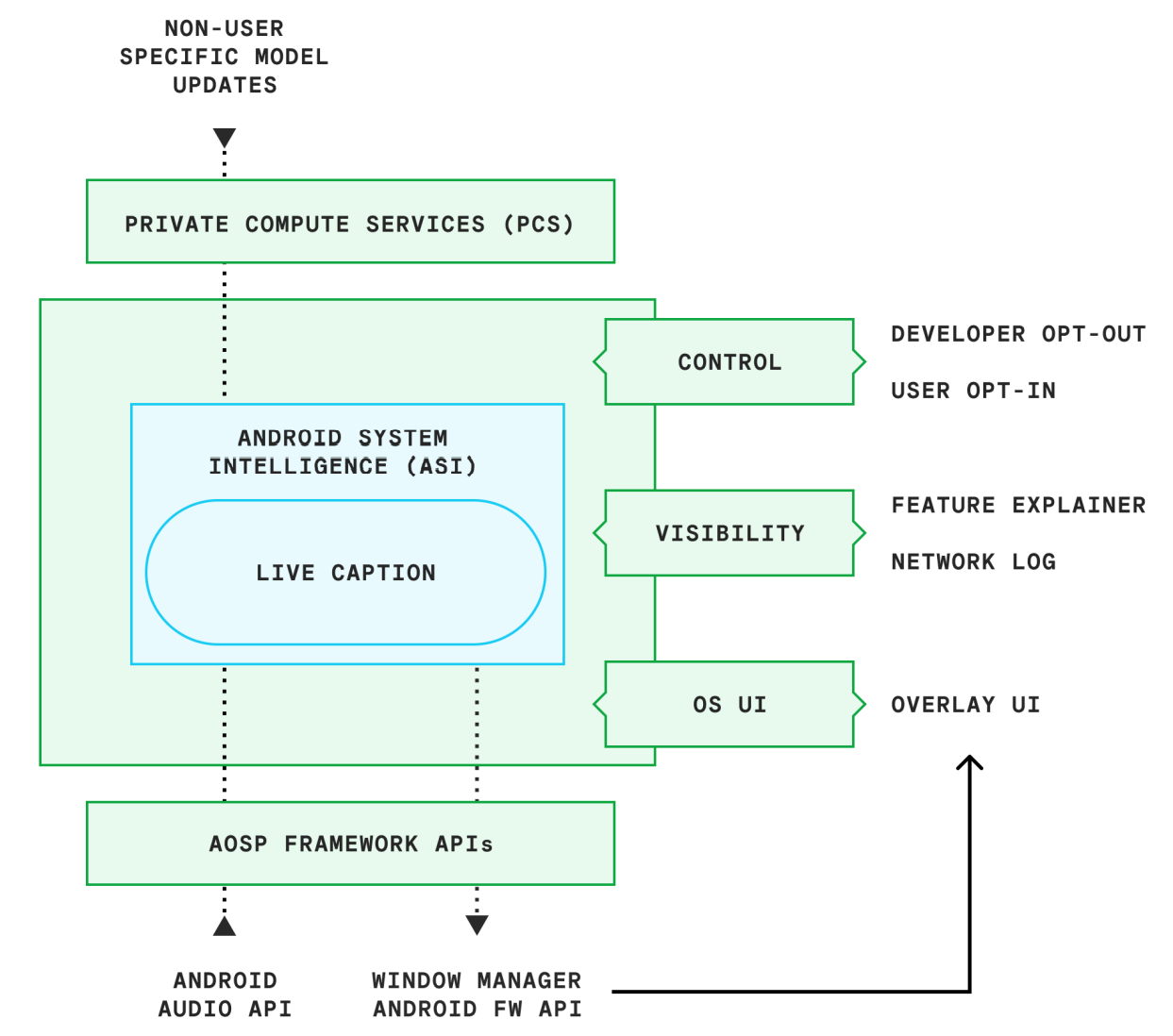

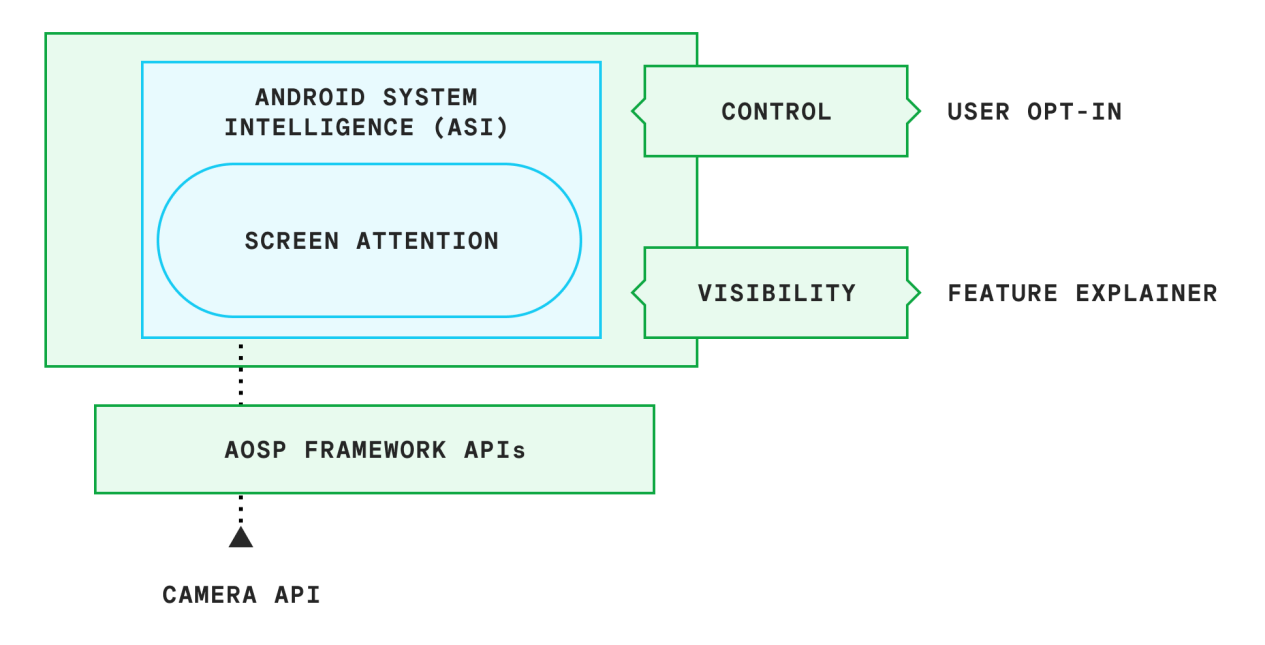

In its technical white paper, Google outlines how three Android System Intelligence features work in the context of the PCC.

Smart Reply

Smart Reply suggests quick responses to messages based on previous and current on-screen content. Android System Intelligence extracts relevant entities from apps such as addresses, names, and other pieces of information, and then offers them to the user as suggestions. These suggestions are made possible via the PCC. Google takes the following steps to implement the feature safely and securely.

- Uses the Content Capture API as a data source, which is an Android Framework API with limits on access.

- Users and app developers can opt out of Smart Reply.

- Device administrators can disable this feature with a policy that disables screen capture.

- Users can specifically allow the data to leave the PCC.

- No data leaves the PCC boundary at rendering time, as suggestions are shown in a delegated UI via a specific Android Framework API.

- Candidate filtering by typed input is disabled after a series of keystrokes, because the keyboard could otherwise infer information from how many candidate responses are displayed.

- Data is held in the PCC only for a short time, so suggestions are based on recently observed data.

- Data is only obtained from apps that the PCC knows how to read, which is enforced by an allowlist in the system.

- Uses PCS APIs for network access.

- ML models are not user-specific.

- Analytics are performed through federated analytics with secure aggregation.
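Secure aggregation is what lets the server learn a total without seeing any single device's contribution. Below is a minimal Python sketch of the textbook pairwise-masking idea, assuming every pair of devices already shares a random mask and no device drops out; production protocols additionally handle key agreement and dropouts, which this toy omits. `pairwise_masks`, `mask_value`, and `aggregate` are illustrative names, not a Google API. Each mask is added by one device and subtracted by the other, so the masks cancel in the sum while each individual upload looks random.

```python
import random
from typing import Dict, List, Tuple

MODULUS = 2**32  # all arithmetic is done modulo this value

Masks = Dict[Tuple[int, int], int]


def pairwise_masks(n_clients: int, rng: random.Random) -> Masks:
    """One random mask per client pair (i, j) with i < j.

    Stand-in for masks each pair would derive from a shared secret."""
    return {
        (i, j): rng.randrange(MODULUS)
        for i in range(n_clients)
        for j in range(i + 1, n_clients)
    }


def mask_value(client: int, value: int, masks: Masks) -> int:
    """What a client uploads: its value plus/minus every mask it shares."""
    masked = value
    for (i, j), m in masks.items():
        if client == i:
            masked += m  # lower-indexed client adds the shared mask
        elif client == j:
            masked -= m  # higher-indexed client subtracts it
    return masked % MODULUS


def aggregate(masked_values: List[int]) -> int:
    """Server side: the masks cancel, leaving only the true total."""
    return sum(masked_values) % MODULUS


if __name__ == "__main__":
    # Hypothetical per-device counts, e.g. how often a suggestion was shown.
    counts = [3, 0, 5, 1]
    masks = pairwise_masks(len(counts), random.Random(0))
    uploads = [mask_value(c, v, masks) for c, v in enumerate(counts)]
    print(uploads)             # individually look random
    print(aggregate(uploads))  # 9
```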

Live Caption

Live Caption provides captions for any content currently playing on your smartphone, processing all audio and displaying a transcript in an AOSP-rendered UI. The data is not accessible to apps. Google takes the following steps to implement the feature safely and securely.

- Uses the Android Audio APIs as a data source, which are Android Framework APIs with limits on access.

- This feature has to be enabled by the user and is not enabled by default.

- Data does not leave the PCC and is only rendered in a system surface.

- Captions are displayed in an overlay drawn with the WindowManager API.

- Data is held in the PCC only for a short time.

- Uses PCS APIs for network access.

- Model updates are not user-specific.
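Holding data "for a short time" is essentially a time-to-live buffer. How long that window is, and how it is enforced, isn't specified here, so the following Python sketch is an assumption: `ShortLivedBuffer` and the `ttl_seconds` value are hypothetical. Old caption fragments are evicted whenever the buffer is read or written, so nothing older than the window survives.

```python
import time
from collections import deque
from typing import Callable, Deque, List, Tuple


class ShortLivedBuffer:
    """Hypothetical time-bounded store: items older than ttl_seconds are dropped."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the behavior can be tested deterministically
        self._items: Deque[Tuple[float, str]] = deque()  # (timestamp, item), oldest first

    def add(self, item: str) -> None:
        self._evict()
        self._items.append((self._clock(), item))

    def recent(self) -> List[str]:
        self._evict()
        return [item for _, item in self._items]

    def _evict(self) -> None:
        cutoff = self._clock() - self._ttl
        while self._items and self._items[0][0] < cutoff:
            self._items.popleft()
```

With a fake clock and a 10-second TTL, a fragment added at t = 0 is gone when the buffer is read at t = 12.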

Screen Attention

Screen Attention keeps the display on while the user is looking at it. If a face is detected when the screen is scheduled to dim, dimming is postponed. Google takes the following steps to implement the feature safely.

- Uses the Android camera APIs as a data source, which are Android Framework APIs with limits on access.

- This feature has to be enabled by the user and is not enabled by default.

- Data does not leave the PCC; it is only processed within the PCC and by the OS through the AttentionManagerService Framework API.

- Data is only held for a short period of time.

- Does not use any network capabilities. Models are only updated via APK updates.
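The dimming decision itself is simple; what matters for privacy is that only its outcome leaves the sandbox. This Python sketch is a hypothetical model, not the AttentionManagerService interface: `resolve_dim_time`, the `face_present` callback (standing in for the on-device face check), `extension_s`, and the `max_extensions` cap are all assumptions. The function returns a single timestamp; no camera frame or face data is part of its result.

```python
from typing import Callable


def resolve_dim_time(
    scheduled_at: float,
    face_present: Callable[[float], bool],
    extension_s: float,
    max_extensions: int = 10,
) -> float:
    """Return when the screen should actually dim.

    face_present(t) stands in for the on-device face check at time t;
    only the resulting timestamp is returned to the caller."""
    t = scheduled_at
    for _ in range(max_extensions):
        if not face_present(t):
            return t  # nobody is looking: dim as scheduled
        t += extension_s  # a face was detected: postpone and check again later
    return t  # hypothetical cap so a stuck detector can't keep the screen on forever
```

For a user who looks away at t = 45 s, `resolve_dim_time(30, lambda t: t < 45, 10)` postpones twice and returns 50.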

Is the Private Compute Core a Pixel exclusive?

This is where things get really complicated.

The PCC has never been explicitly marketed as a Pixel-exclusive feature. It's on the official Android website, and Google talks about the PCC in the context of Android, not in the context of Pixels. Having said that, monet was technically a Pixel exclusive at one point too. The difference is that Google said monet would be pushed to AOSP in a future release of Android, and OEMs can now implement it themselves. The wording around the PCC is more ambiguous and makes a vague reference to "features provided by Android System Intelligence as implemented in Pixel and potentially other devices for Android 12."

From what I can gather, Android System Intelligence is at least in use on other devices. My Samsung Galaxy S22 Ultra has Android System Intelligence installed, though whether it implements the features Google describes through the Private Compute Core isn't clear.

The Private Compute Core makes a lot of sense for enterprise users

We would love for Google to make information about the PCC and how it protects user privacy more readily available, especially as it relates to other devices. Is the PCC a Pixel exclusive? Can other OEMs implement it? At the moment, it's hard to say, even though the idea behind it is good. It can be a useful asset in protecting smartphone users, too, especially those who use their devices for work but are uneasy about more "invasive" features such as Now Playing and Smart Reply.

The other aspect to consider is whether new features will be added over time. They certainly could be, but judging by the white paper, nothing new has really come to the PCC in a year. Not every feature needs to be routed through it, but users will understandably prefer to have their data protected wherever possible.