Key Takeaways

- The Nvidia GeForce GTX 480 was a technological blunder: its high price, massive power draw, and excessive heat and noise make it one of the worst GPUs Nvidia has ever launched.

- The AMD Radeon R9 FURY X 4 GB faced challenges in performance, pricing, and power consumption, making it less attractive compared to Nvidia's offerings at the time.

- The Nvidia GeForce RTX 4060 Ti 8GB was a significant disappointment: it barely improved on its predecessor's performance, leaned on an unreliable selling point in DLSS 3.0, and shipped with insufficient VRAM.

It's not difficult for a GPU to be bad. It just needs to have poor value, some kind of crippling drawback, or simply be a disappointment. There are plenty of cards that embody one or two of these characteristics, but every once in a while, we bear witness to magical moments when a graphics card hits all three.

Whether we're talking about the best GPUs of all time or the worst, it's pretty hard to narrow down the winners (or losers in this case) to just seven. Thankfully, I'm something of a connoisseur in awful graphics cards and PC hardware in general, so here are my picks for the worst GPUs ever launched.

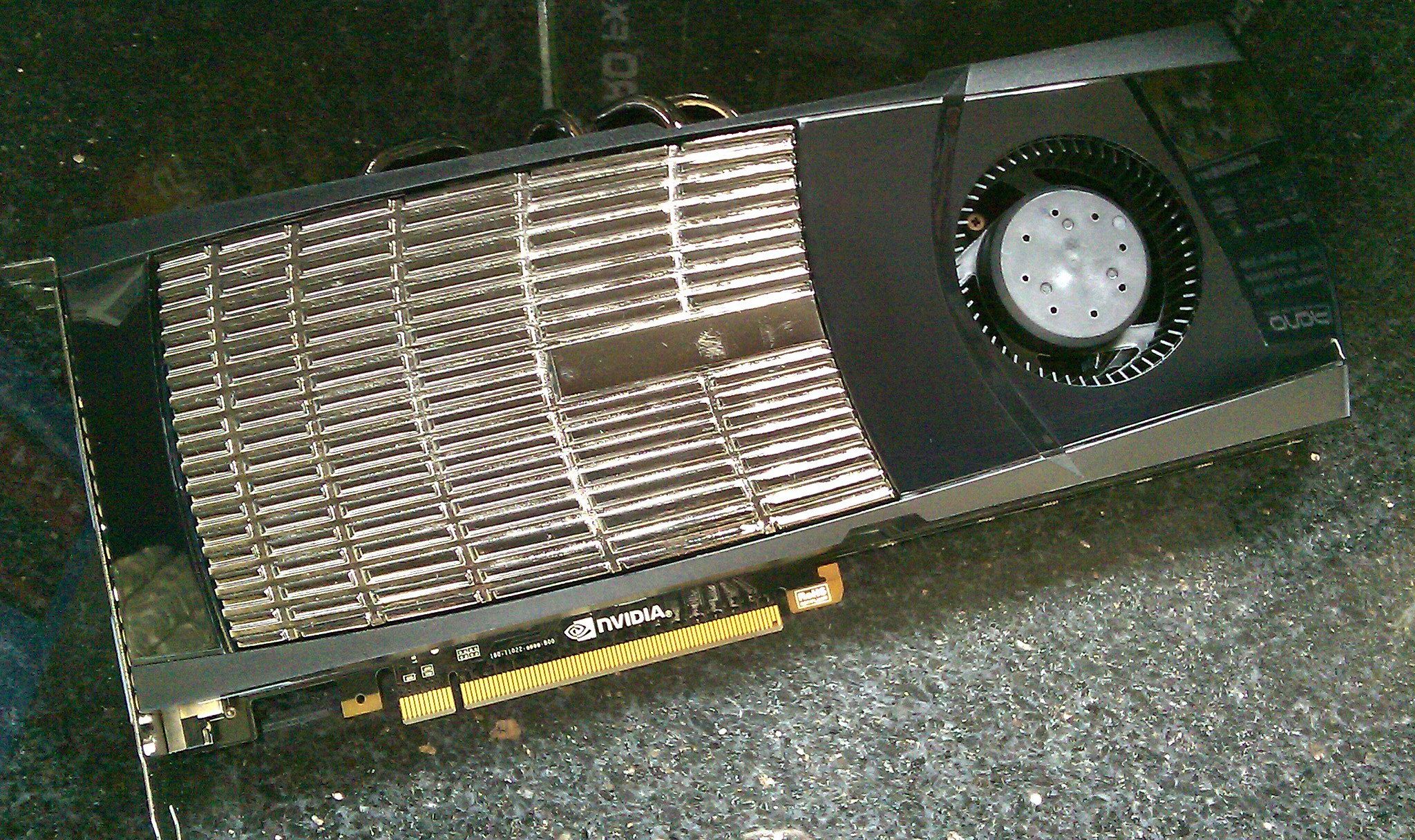

1 Nvidia GeForce GTX 480

The 'G' in GPU stands for grill

In the decade following the emergence of the modern graphics card, there actually weren't too many bad ones, and when there were, they had a minimal impact since the industry was moving so quickly back then. It was pretty common to see next-generation GPUs beat their predecessors by over 50%, and 100% or more wasn't rare. But by 2010, things were getting more complicated, particularly for Nvidia. It was trying to make a massive GPU on TSMC's 40nm node, which was sort of broken. The end result was Nvidia's worst technological blunder ever: Fermi, which powered the GTX 480.

Because the 400 series had to be delayed due to those difficulties, AMD had taken the performance crown from Nvidia with its Radeon HD 5000 series in late 2009 and held it for six months. The GTX 480 did reclaim the crown with a 10% lead over the Radeon HD 5870, but at a terrible price: $500, compared to the HD 5870's $380. And that was just the sticker price; the card got even more expensive to own once you factored in its massive power draw. In AnandTech's testing, the card consumed well over 200W under load and hit over 90 degrees Celsius. If you've ever heard of "the way it's meant to be grilled," this is what it was referring to.
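To put the pricing in perspective, here is a rough sketch of the performance-per-dollar math using only the launch prices and the 10% lead quoted above. The function name and the choice of the HD 5870 as a 1.00 baseline are my own for illustration, and the numbers ignore power costs entirely.

```python
def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance delivered per dollar of launch price."""
    return relative_perf / price_usd


# Figures from the paragraph above; the HD 5870 is treated as the 1.00 baseline.
hd_5870 = perf_per_dollar(1.00, 380)
gtx_480 = perf_per_dollar(1.10, 500)  # 10% faster at $500

price_premium = 500 / 380 - 1    # how much more the GTX 480 cost
value_ratio = gtx_480 / hd_5870  # GTX 480 value relative to the HD 5870

print(f"Price premium: {price_premium:.0%}")
print(f"GTX 480 perf per dollar vs HD 5870: {value_ratio:.1%}")
```

In other words, buyers paid roughly a third more for a tenth more performance, which works out to about 84% of the HD 5870's value before the electricity bill arrives.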

Suffice it to say, the GTX 480 didn't cut it despite its performance. It was way too hot, way too loud, and way too expensive. Nvidia rushed out the GTX 500 series just half a year later, taking advantage of the 40nm node's increased maturity and architecture-level improvements to increase efficiency. A processor is undeniably bad if it has to be replaced after months rather than years, and the GTX 400 series is one of the shortest-lived product lines in GPU history.

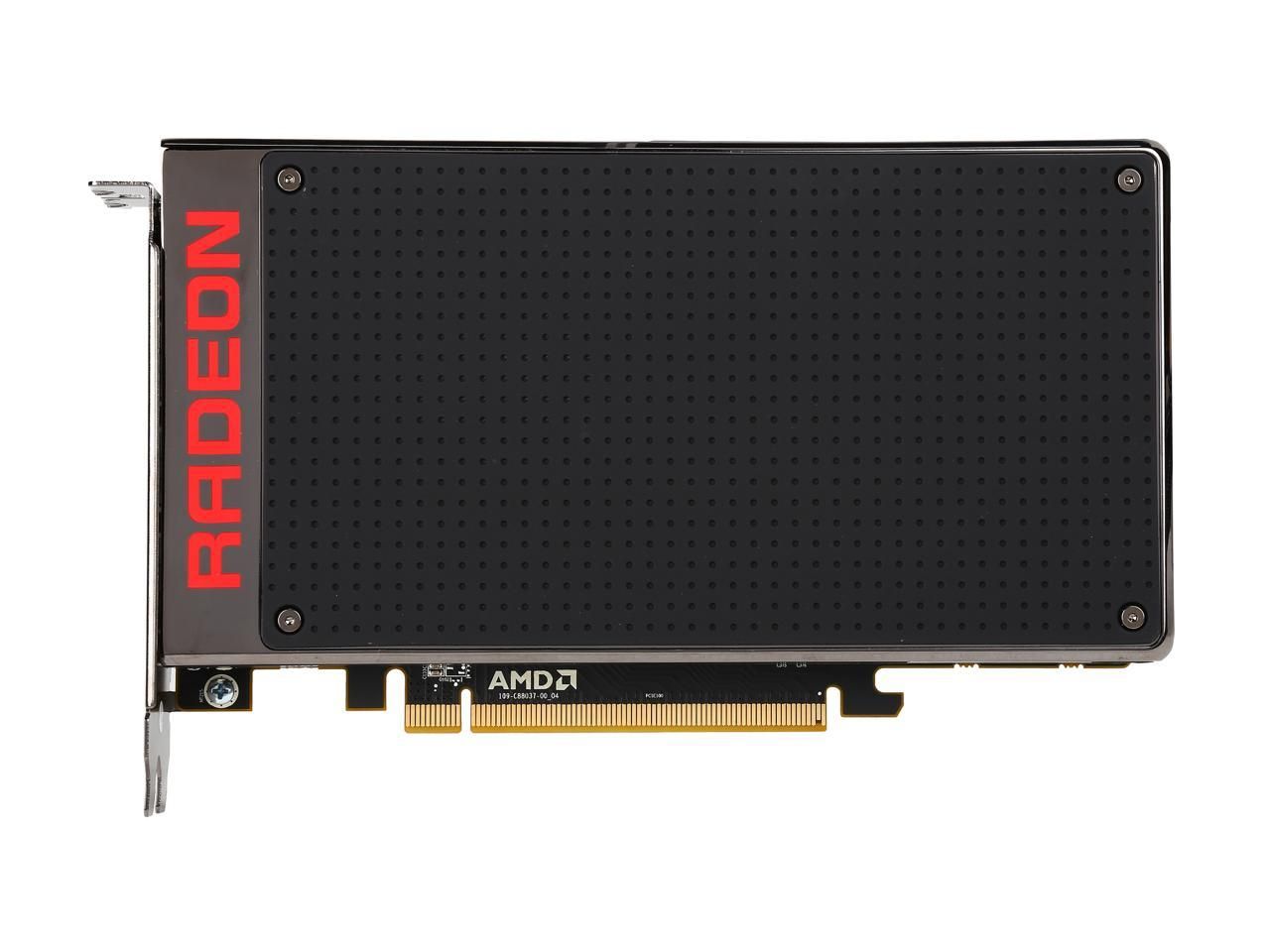

2 AMD Radeon R9 FURY X 4 GB

The Fury X 4 GB was not the GPU AMD needed to launch in 2015

The Radeon R9 Fury X, released by AMD in June 2015, faced considerable challenges that contributed to its mixed reception within the graphics card market. Positioned as a high-end GPU, its performance did not match up to the competition, particularly against Nvidia's offerings at the time.

The pricing of the Fury X was relatively high due to its elevated manufacturing costs, and users found alternative options that provided better performance for a similar or lower price. This factor, coupled with its performance limitations, made it less attractive to consumers seeking a powerful and cost-effective graphics solution.

One significant limitation of the R9 Fury X was its 4GB of High Bandwidth Memory (HBM), which, while innovative, proved insufficient for some users as games and applications became more graphically demanding. The closed-loop liquid cooling solution, although efficient, received criticism for pump noise and for limiting users who wanted to customize their cooling setups. Additionally, the card's relatively high power consumption contributed to concerns about heat and noise, detracting from its overall appeal.

Nvidia's strong offerings, such as the GTX 980 Ti and later the GTX 10 series, often outperformed or matched the Fury X at a similar or lower price point. This made it challenging for AMD's flagship card to stand out in a market where performance, pricing, and features played crucial roles in consumer choices. While the Radeon R9 Fury X tried to innovate in a competitive market by bringing new features like HBM and using a closed-loop cooling solution, these various factors collectively contributed to its reputation as a less successful option within its release timeframe.

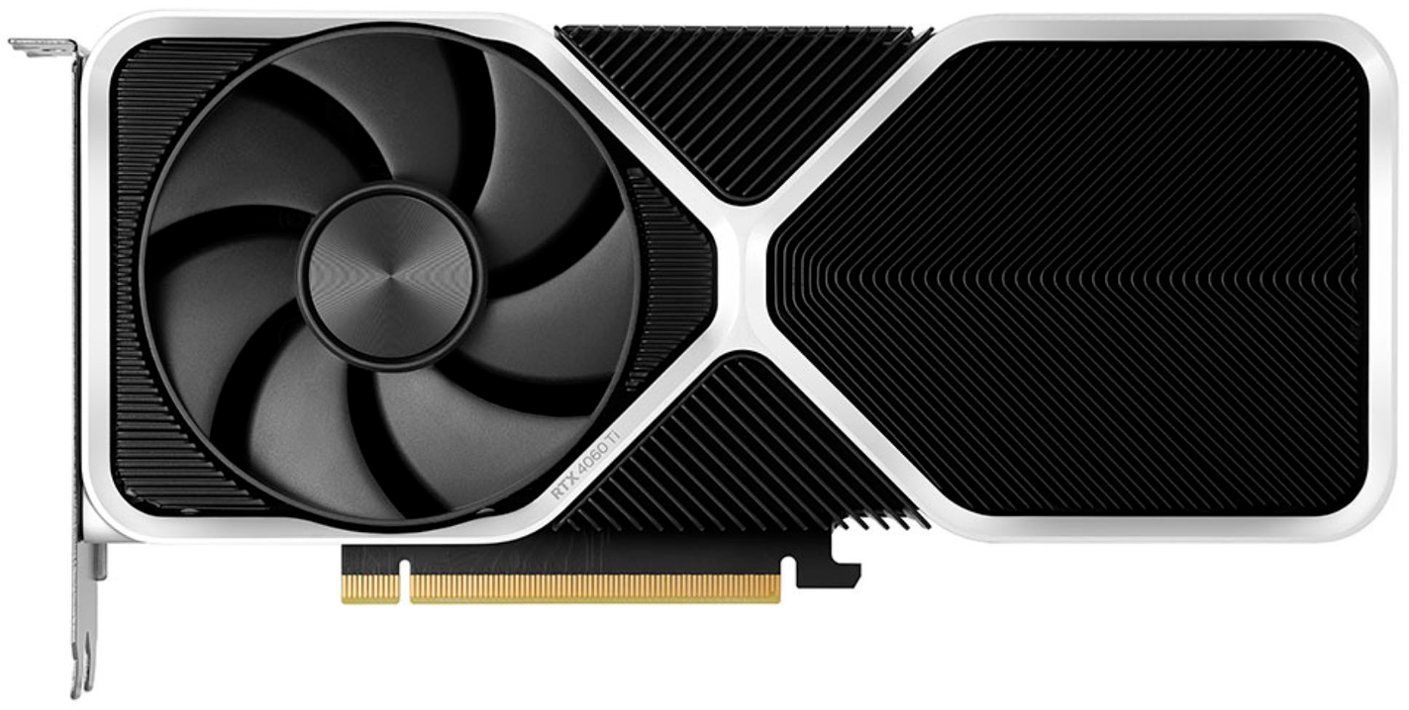

3 Nvidia GeForce RTX 4060 Ti 8GB

Budget gamers' broken dream

You might be surprised to see such a recent GPU on this list, but the Nvidia GeForce RTX 4060 Ti 8GB stands out as a significant disappointment among modern graphics cards, primarily because it barely improves on its predecessor, the RTX 3060 Ti. In real-world use, the two are nearly indistinguishable, and you can even find benchmark results in which the older RTX 3060 Ti outperforms the newer card. That leaves consumers questioning whether there is any reason to upgrade to the supposedly newer and improved GPU.

Nvidia's attempt to salvage the RTX 4060 Ti's reputation by highlighting its DLSS 3.0 capability falls short of providing a compelling reason for users to invest in this graphics card. While DLSS 3.0 is indeed a technological advancement, its limited support across various games and inconsistent performance gains in diverse scenarios make it an unreliable selling point. The reliance on artificial frame generation further adds to the skepticism surrounding its practical benefits, undermining the GPU's overall appeal.

Adding to the litany of issues, the RTX 4060 Ti's 8GB memory buffer proves inadequate for delivering satisfactory performance in 1440p and 4K gaming scenarios, a critical shortcoming given its hefty $400 price tag. This lack of sufficient VRAM not only diminishes its value for money but also positions the RTX 4060 Ti as one of the worst graphics cards in recent memory. In a market driven by innovation and performance improvements, the RTX 4060 Ti's failure to live up to expectations shows what happens when a new generation delivers only an incremental upgrade instead of a substantive advance.

4 AMD Radeon VII

The forgotten AMD flagship

Although the RTX 20 series was by no means a great product line, it still put AMD even further behind because the new RTX 2080 Ti was a full 30% faster than the GTX 1080 Ti, and AMD's RX Vega 64 only matched the GTX 1080 (sans Ti). Something had to be done, but AMD's upcoming cards using the 7nm Navi design wouldn't be available until mid-2019. But then someone at AMD realized something: They already had a 7nm GPU, a 7nm version of Vega made for data centers. It wasn't going to beat the 2080 Ti, but something is better than nothing, right?

Well, the Radeon VII probably would've been better off not existing. Like the 14nm Vega 56 and 64, it was pretty inefficient and although it roughly matched the RTX 2080, it did so while consuming about 70 more watts. It didn't support ray tracing or upscaling technology either, and the cherry on top was its $700 price tag, which was the same as the RTX 2080.

But perhaps the worst thing for AMD was that since this was a data center GPU, it came with 16GB of extremely expensive HBM2 memory. AMD probably lost money on the Radeon VII and it wasn't even a good GPU!

Because the RX 5700 XT launched just a few months later at $400 and had about 90% of the performance of the Radeon VII, this former GPU flagship has been largely forgotten, and for good reason. It didn't add anything new and its name was awful. Seriously, Radeon VII is a terrible product name. I guess AMD is pretty embarrassed about the whole thing because its official product page has since been deleted from the company's website.
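As a back-of-the-envelope illustration of how badly the RX 5700 XT undercut it, the sketch below works out what the Radeon VII would have needed to cost to match the newer card's performance per dollar. It uses only the prices and the "about 90%" figure from this section; the function name is made up for the example, and it ignores the Radeon VII's extra VRAM.

```python
def break_even_price(rival_price_usd: float, rival_relative_perf: float) -> float:
    """Price at which a card with performance 1.00 matches the rival's
    performance per dollar, given the rival's price and relative performance."""
    return rival_price_usd / rival_relative_perf


radeon_vii_launch_price = 700

# RX 5700 XT: $400 for about 90% of the Radeon VII's performance.
fair_price = break_even_price(400, 0.90)
overcharge = radeon_vii_launch_price - fair_price

print(f"Break-even price for the Radeon VII: ${fair_price:.0f}")
print(f"Launch price above break-even: ${overcharge:.0f}")
```

By that measure the Radeon VII would have had to sell for somewhere around $444 to be as good a deal, more than $250 below its actual launch price.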

5 Nvidia Titan Z

A failure in the dual-GPU realm

The monstrous Nvidia GeForce Titan Z, released in 2014, struggled to gain traction in the market from the very beginning, due to a combination of factors. One of the most significant hurdles was its exorbitant price, which stood at a whopping $3,000 at launch. This made the Titan Z prohibitively expensive for the majority of gamers and even some professionals, limiting its market reach. The issue of the high price point was further aggravated by the availability of other powerful graphics cards from Nvidia, such as the Titan Black and the GTX 780 Ti, which offered competitive performance at a fraction of the cost.

In addition to the pricing issue, the Titan Z suffered from its dual-GPU design based on the aging Kepler architecture. By 2014, newer architectures, such as Maxwell, were gaining prominence in the market, offering improved performance and power efficiency. The Titan Z's reliance on Kepler made it less appealing to consumers looking for cutting-edge technology, as they could opt for more advanced and cost-effective alternatives.

Furthermore, the Titan Z faced challenges related to its limited market appeal. Positioned as a dual-GPU card catering to both gaming enthusiasts and professionals in fields like content creation, the card failed to carve out a compelling niche. Professionals often sought more specialized solutions, and gamers could find better value in other graphics cards. The Titan Z's design, which resulted in higher power consumption and heat, also posed practical challenges for users, especially those with smaller computer cases. These factors collectively contributed to the Titan Z's commercial struggles and its classification as a market flop.

6 Nvidia GeForce GT 1030 DDR4

Gamers thought Nvidia was joking

The entry-level Nvidia GeForce GT 1030 DDR4 has earned quite a negative reputation due to a controversial switch in memory type from the faster GDDR5 to the much slower DDR4, all while retaining the same product name and model number. This switch had a profound impact on the GPU's performance, particularly in terms of memory bandwidth, a critical factor for graphics processing. The GDDR5 variant boasted an acceptable 48 GB/s of memory bandwidth, making it suitable for light gaming and multimedia tasks. However, the transition to DDR4 saw a drastic reduction to a mere 16 GB/s, causing certain games to experience a significant drop in performance, sometimes by as much as half.
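The bandwidth gap follows directly from the usual formula: bus width in bytes multiplied by the effective data rate. The sketch below assumes a 64-bit memory bus on both variants, about 6 GT/s effective for the GDDR5 card, and about 2.1 GT/s for the DDR4 card. None of those three figures appear in this article, so treat them as assumptions; with them, the DDR4 result comes out slightly above the rounded 16 GB/s quoted above.

```python
def memory_bandwidth_gbps(bus_width_bits: int, data_rate_gtps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * data_rate_gtps


# Assumed specs (not stated in the article): 64-bit bus on both variants.
gddr5 = memory_bandwidth_gbps(64, 6.0)  # assumed ~6 GT/s effective GDDR5
ddr4 = memory_bandwidth_gbps(64, 2.1)   # assumed ~2.1 GT/s effective DDR4

print(f"GDDR5 variant: {gddr5:.1f} GB/s")
print(f"DDR4 variant:  {ddr4:.1f} GB/s")
print(f"DDR4 keeps {ddr4 / gddr5:.0%} of the GDDR5 bandwidth")
```

Under those assumptions the DDR4 card is left with roughly a third of the original bandwidth, which is consistent with games losing up to half their performance.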

The decision to make such a fundamental change without transparent communication with consumers only exacerbated the negative perception of the GT 1030 DDR4. The gaming community, expecting a certain level of performance based on the initial specifications, felt deceived by Nvidia's decision to cut costs without adequately informing buyers. The lack of clarity and the disparity between marketed and actual performance underscored the importance of transparency in the tech industry and contributed to the GT 1030 DDR4 being labeled as one of the worst bait-and-switch cases in the GPU industry.

While the GT 1030 was not a gaming powerhouse by any stretch of the imagination, the original GDDR5 version was still a decent entry-level card fit for basic gaming and multimedia tasks. The DDR4 version undercut even that modest standing. The hit to gaming performance, coupled with the lack of transparent communication about the change, led to disappointment and frustration among consumers who felt they had purchased a product that did not live up to its advertised capabilities.

7 Nvidia GeForce FX 5800

One of the loudest graphics cards of its time

When we talk about loud graphics cards, no GPU in history springs to mind quite like the Nvidia GeForce FX 5800. The card's primary flaw was overheating: its thermal output was substantial, and to keep it in check, Nvidia fitted an aggressive cooling fan that operated at an exceptionally high volume. The result was an auditory assault that made the GeForce FX 5800 one of the loudest graphics cards of its time.

The noise was so notorious that Nvidia itself acknowledged it in a joke video that compared the card to various ear-splitting objects. In a tongue-in-cheek attempt to illustrate the card's decibel level, Nvidia likened it to a hair dryer and a leaf blower, and even used it as a makeshift grill to cook a couple of sausages, poking fun at both its noise and its thermal output.

This excessive noise was more than a source of frustration for users; it was a real usability problem. The loud fan spoiled the gaming experience and made the card impractical for quiet computing environments or professional settings where noise levels were a critical consideration. The joke video, while light-hearted, served as a testament to the extreme measures Nvidia had to resort to in order to manage the thermal challenges of the GeForce FX 5800. In the end, the combination of high temperatures, an inefficient cooling solution, and the resulting cacophony made the GeForce FX 5800 a symbol of poor design and a cautionary tale in the history of graphics card development.

The competition for the worst graphics card is going to get even fiercer

Things aren't quite as doom and gloom with the latest generation of GPUs as I thought they would be. I once said the RTX 4070 might cost as much as the RX 7900 XTX (it's $400 cheaper) and that the RTX 4060 would be at least $400 (it's $300). But even though value isn't getting worse, the fact that it's not getting better is still quite terrible. It's also quite serious when entry-level GPUs now start at about $300 when they used to start at as little as $100. Desktop PC gaming is quickly becoming unaffordable.

The days of good generation-to-generation improvements in value have long since passed; we'll have to settle for maybe a 10% improvement in bang for buck every year or two. AMD and Nvidia are in a race to the bottom to see which company can get people to pay the most for the worst graphics card. If you can afford the ever-increasing premiums for good graphics cards, then that's great. But for everyone else who can't shell out hundreds of dollars for the same performance we got last generation, it just stinks.